Common website indexing issues often stem from duplicate content or crawl errors. Fixing them involves improving content uniqueness and site accessibility.

Ensuring your website is fully indexed by search engines is crucial for visibility and traffic. Indexing problems can severely impact your site’s ability to attract visitors and rank well in search results. Many website owners face challenges with search engines not indexing their site properly, leading to decreased online presence.

These issues can range from technical glitches to content-related problems. Addressing these concerns promptly can significantly enhance your site’s performance on the web. By understanding the common causes behind indexing issues, you can take proactive steps to ensure your website remains visible and appealing to both search engines and users alike. Making your content unique and ensuring your site is easily navigable are key strategies in overcoming these obstacles.

Introduction To Website Indexing and Google Indexing Issues

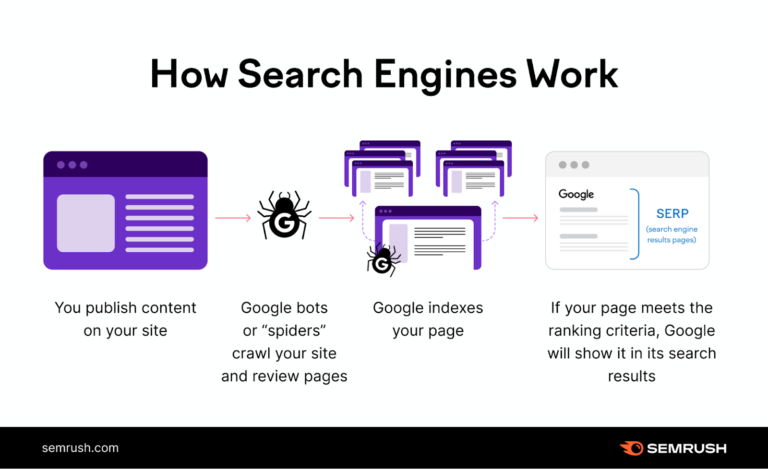

Website indexing starts with understanding how search engines work. A page must be indexed to show up in search results. Search engines first visit your site (crawling) and then store its content (indexing). Proper indexing is crucial for any website’s visibility and success online.

The Importance Of Indexing

Indexing is the key to being found online. Without it, your site is invisible to search engines. Here’s why indexing matters:

- Visibility: Indexed pages can appear in search results.

- Ranking: Search engines can only rank pages they have indexed.

- Access: Users find your content when pages are indexed.

Consequences Of Indexing Issues

When indexing fails, your site suffers. Key consequences include:

| Issue | Impact |
|---|---|
| Low Traffic | Fewer visitors reach your site. |
| Poor Ranking | Your site ranks lower in search results. |
| Lost Revenue | Potential sales are missed. |

Crawling Vs. Indexing: Basics You Need To Know

Understanding the difference between crawling and indexing is crucial. Both processes are vital for websites to appear in search engine results. Let’s dive into the basics of each.

What Is Crawling?

Crawling is when search engines look through the web. They use bots to find new and updated content. This content can be a webpage, image, or video. Think of crawling as exploring. Bots go from link to link to discover everything they can on the internet.

What Is Indexing?

After crawling comes indexing. In this stage, search engines organize information. They keep it in huge databases. From there, they can quickly present this data to users. When your page gets indexed, it means it’s in the search engine’s library. It’s like getting a book placed on a library’s shelves.

Three things shape how bots crawl and index your site:

- Robots.txt file: Tells bots which pages to crawl or ignore.

- Sitemap: A map for search engines. It shows all your website’s important pages.

- Meta tags: Give search engines information about your pages.

To fix indexing issues, make sure your site is easy to crawl. Check your robots.txt file. Make sure it’s not blocking important pages. Also, submit a sitemap to search engines. This helps them find your content faster.

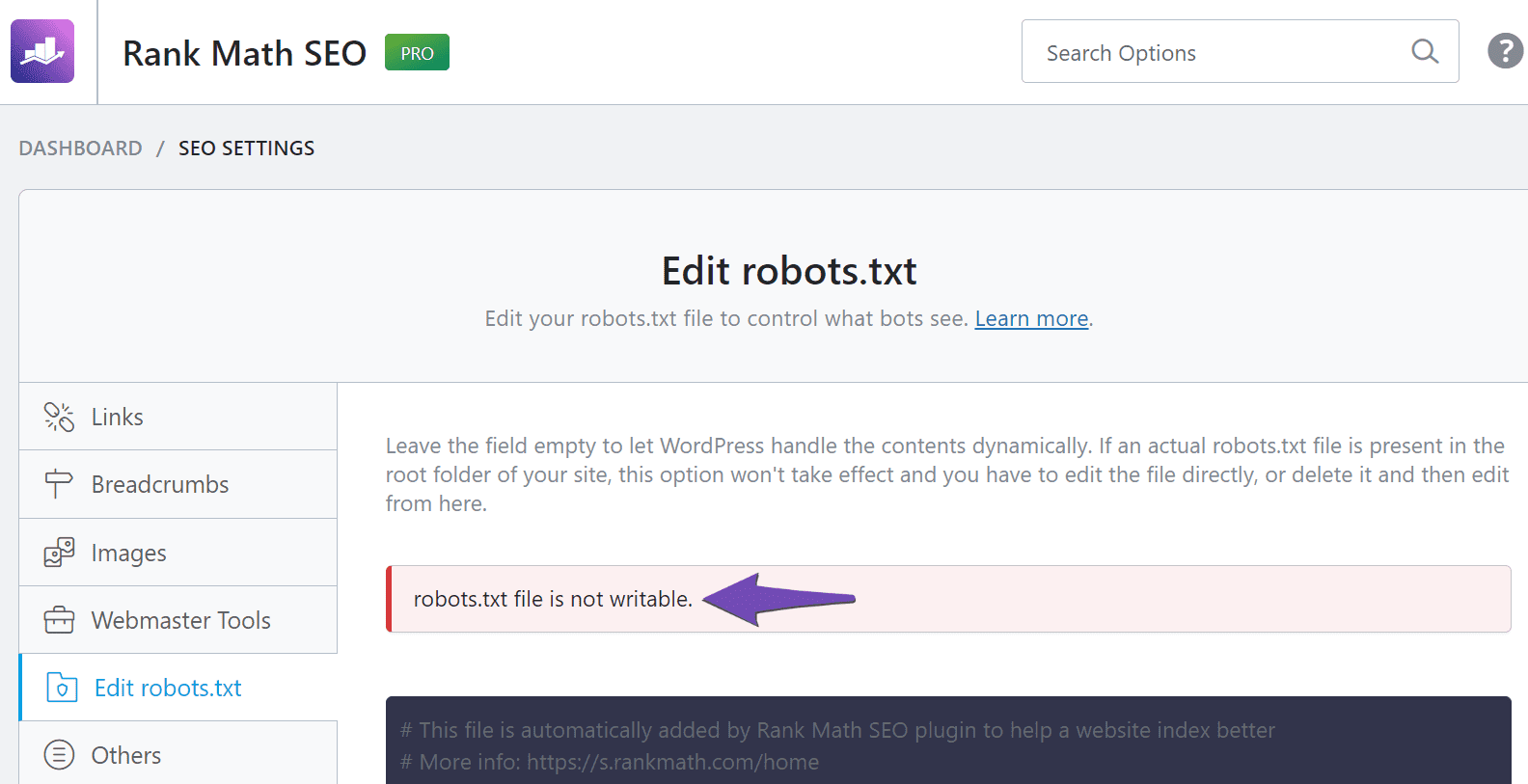

Robots.txt File Blocks for Google Indexing Issues

Robots.txt blocks can be a silent culprit in website indexing issues. This small text file guides search engine crawlers through your site. It has the power to allow or block access to specific content. If not configured correctly, it might prevent search engines from crawling, and therefore indexing, your web pages. Let’s explore how to tackle these blocks and keep your site visible on search engines.

Role Of Robots.txt In Indexing

The robots.txt file plays a critical role in indexing. It tells web crawlers which URLs they may crawl and which to skip. It does not control indexing directly: a blocked URL can still be indexed without its content if other pages link to it, so use a noindex directive for pages that must stay out of search results. The file must be precise to avoid unintended blocks.

How To Correct Robots.txt Errors

Fixing errors in the robots.txt file is essential for proper indexing. Here are key steps to correct common mistakes:

- Locate your robots.txt file. It should be in the root directory of your website.

- Open the file with a text editor to view the directives.

Check for lines with User-agent: and Disallow:. These lines control crawler access. Make sure they don’t block important pages. If you find errors:

- Remove any Disallow: lines that reference content you want indexed.

- Ensure you’re not disallowing your entire site with a slash (Disallow: /).

- Save your changes and upload the updated file to your server.

Verify your changes with a robots.txt testing tool. Google Search Console’s robots.txt report, which replaced the older robots.txt Tester, shows the robots.txt files Google found and flags fetch or parsing problems. After corrections, it can take some time for search engines to re-crawl and index your site. Be patient and monitor progress using your SEO tools.
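You can also run a quick local check before uploading the file. The sketch below uses Python’s standard `urllib.robotparser` module to test a list of important URLs against a robots.txt file. The file contents, the `example.com` URLs, and the `blocked_urls` helper are illustrative assumptions, not taken from any real site. Note that Python’s parser uses simple prefix matching and may not interpret wildcard rules the way Googlebot does, so treat the result as a first pass.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; replace it with the text of your own file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
"""


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that robots_txt disallows for the given user agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical pages you expect search engines to reach.
important = [
    "https://example.com/",
    "https://example.com/products/widget",
    "https://example.com/admin/login",
]

print(blocked_urls(ROBOTS_TXT, important))
```

Any URL in the printed list is blocked for that crawler. If a page you want indexed shows up there, revisit the matching Disallow: line.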

Sitemap Problems for Google Indexing Issues

Sitemap problems can hinder a website’s visibility to search engines. A sitemap guides search engines through a website. It lists URLs and helps with faster indexing. But issues can arise. These issues may include outdated URLs or missing pages. Let’s explore how to create and update sitemaps for better indexing.

Creating An Effective Sitemap

An effective sitemap acts like a map for search engines. It must be well-structured and complete. Here are steps to ensure your sitemap meets these criteria:

- Include all pages you want indexed

- Exclude pages that are not useful to searchers

- Check for errors in URLs

- Use a sitemap generator for accuracy

Ensure your sitemap is in XML format. This is the standard for search engines.
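If you prefer to generate the file yourself, here is a minimal sketch that builds an XML sitemap with Python’s standard library. It assumes Python 3.8 or later. The `build_sitemap` helper, the page list, and the dates are made up for illustration. The namespace URL is the one defined by the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML (as UTF-8 bytes) from (url, last_modified) pairs.

    last_modified is a date string such as "2024-01-15", or None to omit it.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


# Hypothetical pages; list only the URLs you want indexed.
pages = [
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/about", None),
]

print(build_sitemap(pages).decode("utf-8"))
```

Write the returned bytes to a file such as sitemap.xml in your site’s root directory, then submit that URL to search engines.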

Updating Your Sitemap For Better Indexing

Regular updates to your sitemap keep search engines informed. Here’s how to keep your sitemap current:

- Add new pages immediately

- Remove outdated URLs

- Check for errors after making changes

- Submit your updated sitemap to search engines

Use webmaster tools to submit your sitemap. This ensures search engines recognize updates.
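A small script can help you spot when a sitemap has drifted out of date. The sketch below compares the URLs in a sitemap with a list of pages that currently exist on the site. Where that list comes from (a CMS export, a crawl, a database query) is left to you. The `sitemap_diff` helper and the sample data are illustrative assumptions.

```python
import xml.etree.ElementTree as ET

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_diff(sitemap_xml, live_urls):
    """Compare a sitemap with the pages that currently exist.

    Returns (missing, stale): live URLs absent from the sitemap,
    and sitemap URLs that are no longer live.
    """
    root = ET.fromstring(sitemap_xml)
    listed = {loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS) if loc.text}
    live = set(live_urls)
    return sorted(live - listed), sorted(listed - live)


# Hypothetical sitemap and page list.
SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/old-sale</loc></url>
</urlset>"""

LIVE = ["https://example.com/", "https://example.com/new-guide"]

missing, stale = sitemap_diff(SITEMAP, LIVE)
print("Add to sitemap:", missing)
print("Remove from sitemap:", stale)
```

Running a check like this after each content change makes the "add new pages" and "remove outdated URLs" steps harder to forget.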

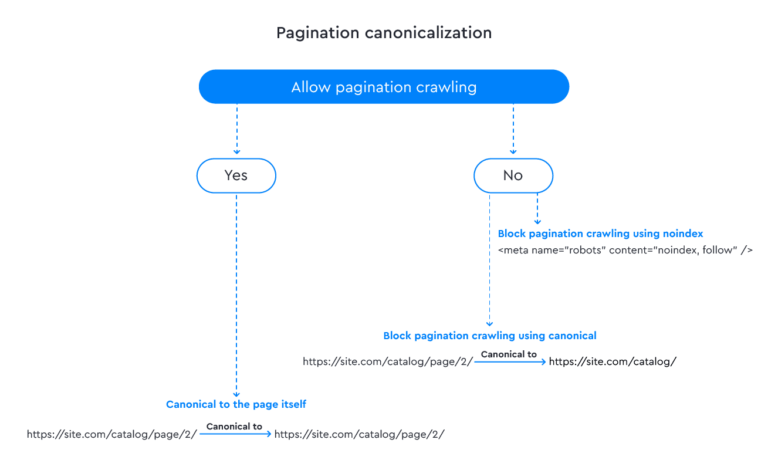

Duplicate Content Dilemmas

Duplicate content can hurt your website’s SEO ranking. This problem happens when the same content appears at more than one URL, on your own site or elsewhere on the internet. It confuses search engines. They struggle to decide which version to show. Let’s dive into how to spot and fix these issues.

Identifying Duplicate Content

Finding duplicate content requires a few steps:

- Use tools like Copyscape or Siteliner. They find copies online.

- Check Google’s Search Console for notices about duplicate content.

- Look for URLs with similar content within your site.

Remember, some duplicate content is normal, such as printer-friendly versions of pages.
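For duplicates within your own site, a rough first pass is to fingerprint each page’s text and group URLs that share a fingerprint. The sketch below assumes you have already extracted the main text of each page. The `fingerprint` and `find_duplicates` helpers and the sample pages are hypothetical. This approach only catches copies that are identical after lowercasing and whitespace cleanup. Near-duplicates need a similarity measure, which is what the dedicated tools provide.

```python
import hashlib
import re
from collections import defaultdict


def fingerprint(text):
    """Hash the text after lowercasing it and collapsing runs of whitespace."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_duplicates(pages):
    """Group URLs whose text is identical after normalization.

    pages maps each URL to the extracted text of that page.
    """
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Hypothetical extracted page text.
pages = {
    "/shoes": "Red  running shoes.\n",
    "/shoes?print=1": "red running shoes.",
    "/about": "About us",
}

print(find_duplicates(pages))
```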

Solutions For Duplicate Content

Once you find duplicates, it’s time to fix them. Here’s how:

- 301 Redirects: Redirect all duplicates to the original page.

- Canonical Tags: Tell search engines which page is “main.”

- Update Content: Rewrite or remove duplicates.

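- Handle URL Parameters: Google retired the URL Parameters tool in Search Console in 2022, so point parameterized URLs to the main version with canonical tags and consistent internal links.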

Fixing duplicate content boosts your site’s SEO. Start today!
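To confirm that canonical tags are actually in place, you can read them straight from a page’s HTML. The sketch below uses Python’s standard `html.parser` module. The `CanonicalFinder` class, the `canonical_urls` helper, and the sample markup are illustrative assumptions. Fetching the HTML is left out so the example stays self-contained.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collect the href of every <link rel="canonical"> tag in a document."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = dict(attrs)
        rel_values = (attr.get("rel") or "").lower().split()
        if "canonical" in rel_values and attr.get("href"):
            self.canonicals.append(attr["href"])


def canonical_urls(html):
    """Return all canonical URLs declared in the given HTML."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonicals


# Hypothetical page markup.
PAGE = """<head>
  <link rel="stylesheet" href="/site.css">
  <link rel="canonical" href="https://example.com/shoes">
</head>"""

print(canonical_urls(PAGE))
```

A page should normally declare exactly one canonical URL. An empty list or more than one entry is worth investigating.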

Credit: prerender.io

Meta Tags And Directives

Meta Tags and Directives play crucial roles in website indexing. They communicate with search engines, guiding them on how to handle your content. Correct use ensures better visibility. Misuse or neglect can hide your site from search results.

Meta Tags That Affect Indexing

Meta tags provide search engines with metadata about your web pages. Some tags influence indexing directly. Let’s explore these important tags:

- Robots Meta Tag: Controls whether search engines index a page and follow its links.

- Noindex: Tells search engines not to index the page.

- Nofollow: Advises against following links on the page.

- Noarchive: Prevents cached versions of the page.

Best Practices For Meta Tags And Directives

Follow these best practices to ensure proper indexing:

- Use index, follow (the default when no robots directive is set) for indexable content.

- Apply noindex strategically on duplicate or private pages.

- Ensure canonical tags are correct to avoid duplicate content issues.

- Keep meta tags updated and relevant to page content.

Remember, consistent and correct implementation of meta tags and directives enhances visibility and indexing.
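A stray noindex is easy to miss, so it can help to check pages programmatically. The sketch below reads the robots meta tag from a page’s HTML with Python’s standard `html.parser` module and reports whether the page allows indexing. The class and function names and the sample markup are hypothetical. It only inspects the HTML, so a noindex sent through the X-Robots-Tag HTTP header would need a separate check.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        name = (attr.get("name") or "").lower()
        if name in ("robots", "googlebot"):
            content = attr.get("content") or ""
            for directive in content.split(","):
                if directive.strip():
                    self.directives.add(directive.strip().lower())


def robots_directives(html):
    """Return the set of robots directives declared in the HTML."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


def is_indexable(html):
    """True unless the page declares noindex (or none, which implies noindex)."""
    directives = robots_directives(html)
    return "noindex" not in directives and "none" not in directives


# Hypothetical page markup.
PAGE = '<head><meta name="robots" content="noindex, follow"></head>'

print(robots_directives(PAGE), is_indexable(PAGE))
```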

Server And Connectivity Issues

Server and connectivity issues can block search engines from accessing a website. This leads to indexing problems. A stable server connection is vital. Let’s explore common server errors and how to ensure stability for indexing.

Common Server Errors

Websites may face several server-related errors. These include the infamous 500 Internal Server Error and the 503 Service Unavailable Error. Both disrupt website access.

- 500 Error: A generic error when the server fails.

- 503 Error: Happens during server overloads or maintenance.

To fix these, check server logs. They provide error details. Contact your hosting provider for help. They can offer solutions.
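Before digging into logs, a quick status check can confirm what the server is returning. The sketch below uses Python’s standard `urllib` to request a URL and sort the response into a broad category. The `fetch_status` and `classify_status` helpers are illustrative assumptions. Note that `urlopen` follows redirects automatically, so you will usually see the final status rather than a 3xx code, and a connection failure raises `URLError` instead of returning a status.

```python
import urllib.error
import urllib.request


def classify_status(status):
    """Map an HTTP status code to a broad category."""
    if 200 <= status < 300:
        return "ok"
    if 300 <= status < 400:
        return "redirect"
    if 400 <= status < 500:
        return "client error"
    if 500 <= status < 600:
        return "server error"
    return "unknown"


def fetch_status(url, timeout=10):
    """Send a HEAD request and return the HTTP status code (makes a network call)."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # 4xx and 5xx responses are raised as HTTPError; the code is still useful.
        return error.code


print(classify_status(503))
```

For a live check, call `classify_status(fetch_status(url))` with one of your own URLs. A result of "server error" points to the 5xx problems described above.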

Ensuring Server Stability For Indexing

A stable server ensures consistent website access. This helps with indexing.

Monitor server uptime. Use tools like Uptime Robot. It checks your site’s status regularly.

Optimize server performance. Upgrade hosting plans if necessary. Consider using a Content Delivery Network (CDN). It reduces load times.

Regularly test server response times. Use Google’s PageSpeed Insights for this. Fast response times improve indexing chances.

Lastly, maintain regular backups. They prevent data loss during outages. This keeps your site index-ready.

Content Quality And Relevance

Google loves quality content. Websites need strong content to rank well. Poor content often fails to index. Let’s explore content quality and its role in indexing.

How Content Quality Impacts Indexing

Search engines aim to provide the best results. They prefer content that is:

- Original – Unique, not copied

- Useful – Answers user queries

- Engaging – Keeps readers interested

- Well-structured – Easy to read and understand

Content that falls short on these points is less likely to be indexed. High-quality content gets better visibility.

Improving Content For Better Indexing

Focus on these areas to improve content:

- Perform Keyword Research: Find terms users search for.

- Create Valuable Content: Solve problems or answer questions.

- Ensure Readability: Short sentences. Simple words.

- Use Multimedia: Add images, videos for engagement.

- Update Regularly: Keep content current and relevant.

Remember to review and refine content often. Quality content earns better indexing and more traffic.

Utilizing Google Search Console

Google Search Console is a vital tool for webmasters. It helps you understand how Google views your site. You can also troubleshoot common indexing issues. Let’s dive into how to use Google Search Console to keep your site fully indexed.

Monitor Your Indexing Status

Google Search Console provides a ‘Page indexing’ report (formerly ‘Index Coverage’). This report shows which pages are indexed and which are not. It also explains why some pages might be missing. To check this report:

- Sign in to Google Search Console.

- Select your website.

- Click on ‘Pages’ under the ‘Indexing’ section.

Review the details here. Fix the issues to improve your site’s indexing.

Submitting Pages For Reindexing

Sometimes, you need to prompt Google to reindex a page. This could be after updating content or fixing issues. Here’s how:

- Go to Google Search Console.

- Type the URL of the page into the ‘Inspect any URL’ bar.

- Press ‘Enter’ and wait for the page inspection to complete.

- If the page is not indexed, click ‘Request indexing’.

Note: Google limits the number of pages you can submit per day. Use this feature wisely.

Credit: rankmath.com

Advanced Techniques For Troubleshooting

Exploring advanced techniques helps fix tricky website indexing issues. Find out how log files and diagnostic tools can pinpoint problems.

Using Log Files To Understand Indexing Issues

Log files are treasure maps for SEO experts. They track search engine crawls. Analyze them to spot indexing roadblocks.

Here’s how to use log files effectively:

- Identify crawl frequency: Check how often search engines visit.

- Spot crawl errors: Look for 4xx and 5xx status codes.

- Analyze user agents: Ensure Googlebot isn’t blocked.

Tools like Screaming Frog Log File Analyzer simplify this process.
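If you want a feel for what such tools do, the three checks above can be sketched in a few lines of Python. The example assumes your server writes the common "combined" access log format used by Apache and Nginx. The sample lines, the regular expression, and the `googlebot_summary` helper are illustrative. It matches on the user-agent string only, which can be spoofed, so confirm genuine Googlebot traffic with a reverse DNS lookup before drawing firm conclusions.

```python
import re
from collections import Counter

# Matches the combined log format: IP, identity, user, [time], "request", status, size, "referer", "agent".
LOG_PATTERN = re.compile(
    r'\S+ \S+ \S+ \[[^\]]+\] "(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_summary(lines):
    """Count Googlebot requests per status code and list paths that returned 4xx or 5xx."""
    statuses = Counter()
    error_paths = []
    for line in lines:
        match = LOG_PATTERN.match(line)
        if not match or "Googlebot" not in match["agent"]:
            continue
        status = int(match["status"])
        statuses[status] += 1
        if status >= 400:
            error_paths.append(match["path"])
    return statuses, error_paths


# Hypothetical log lines.
SAMPLE = [
    '66.249.66.1 - - [10/Jan/2024:06:25:01 +0000] "GET /products/widget HTTP/1.1" 200 5123 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.1 - - [10/Jan/2024:06:25:09 +0000] "GET /old-page HTTP/1.1" 404 312 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [10/Jan/2024:06:26:40 +0000] "GET / HTTP/1.1" 200 1024 "-" '
    '"Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]

statuses, error_paths = googlebot_summary(SAMPLE)
print(dict(statuses), error_paths)
```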

Leveraging Third-party Tools For Diagnostics

Third-party tools offer deep insights into indexing. They reveal issues invisible to the naked eye.

Best tools for diagnostics include:

- Google Search Console: Monitors Google’s view of your site.

- Ahrefs: Tracks backlinks and keywords.

- Moz: Offers SEO audit features.

Use these tools to get a clear indexing picture. They help fix issues fast.

Case Studies: Successful Indexing Revamps

These case studies show how real websites fixed their indexing issues. We will look at the examples and the lessons behind their success.

Real-world Examples Of Indexing Fixes

Many websites have faced and overcome indexing challenges. Here are their stories:

- Online Retailer Boosts Sales: An e-commerce site had indexing issues. They couldn’t find their products on Google. They updated their sitemap and used robots.txt wisely. Soon, Google indexed their pages, and sales increased.

- Blog Gains More Readers: A popular blog lost traffic because of indexing errors. They fixed broken links and improved site speed. Their stories got indexed again, bringing back readers.

Key Takeaways From Indexing Success Stories

These stories teach us important lessons:

- Check Your Sitemap: Make sure your sitemap is up-to-date. It guides search engines through your site.

- Use robots.txt Wisely: This file tells search engines what to crawl. Use it to keep crawlers out of sections that add no search value, and use a noindex tag for pages you don’t want indexed.

- Fix Broken Links: Broken links stop search engines. Fix them to help indexing.

- Improve Site Speed: Fast sites are easy to index. Make your site quick.

Following these steps can help fix indexing issues. They lead to better visibility online.

Credit: www.gigde.com

Conclusion: Maintaining Indexing Health

Keeping your website easy to find is like caring for a garden. Just like plants need water and sunlight, your website needs regular checks to stay visible. Let’s explore how you can keep your website’s indexing health in top shape.

Regular Monitoring And Maintenance

Checking your website often is key. It’s like looking under the hood of a car. You want to catch any issues before they grow. Use tools like Google Search Console to spot problems early. This approach helps you fix small issues before they become big.

- Review your website’s indexing status regularly.

- Fix broken links and errors as soon as you find them.

- Update content to keep it fresh and engaging.

Staying Updated With Search Engine Changes

Search engines update their rules often. Staying informed helps you adapt. Follow SEO news and apply updates to keep your website in line with new guidelines.

- Read SEO blogs and forums.

- Attend webinars and workshops about SEO.

- Apply new SEO strategies to your website.

To sum up, maintaining your website’s visibility is an ongoing task. Regular checks and updates ensure your site stays easy to find. Treat your website like a garden. Give it the care it needs to grow and thrive.

Frequently Asked Questions

Why Is My Website Not Showing Up In Search Results?

There may be several reasons, such as the site being new, having crawl errors, or a noindex tag. Verify your site with search engines, submit a sitemap, and check for noindex directives.

How Do I Fix Crawl Errors On My Site?

Identify crawl errors in Google Search Console (or the equivalent webmaster tool for other search engines) and address them by ensuring all URLs are correct and accessible. Update your sitemap and resubmit it to search engines for re-crawling.

What Is A Noindex Tag And How Does It Affect Indexing?

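A noindex tag instructs search engines not to index a page. If present, remove it from the page’s HTML or HTTP response header to allow indexing. Check both the robots meta tag and the X-Robots-Tag header. Note that Google does not support noindex rules in robots.txt, and a page blocked by robots.txt cannot be crawled, so a noindex tag on it will not be seen.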

Can Server Errors Impact Website Indexing?

Yes, server errors like 5xx responses can hinder a search engine’s ability to index your site. Ensure your server is stable and pages are loading correctly to facilitate proper indexing.

Conclusion

Navigating website indexing issues can seem daunting. Yet, with the strategies covered, you can enhance your site’s visibility. Remember, regular audits and swift fixes safeguard your online presence. Stay vigilant, update content, and watch your website thrive in search engine rankings.