Google crawl rate limits determine how often Googlebot visits your website. This affects how quickly updates appear in search results.

Understanding Google’s crawl rate limits is important for website owners. These limits influence how often Googlebot fetches your pages, and therefore how quickly new or updated content can be indexed. A higher crawl rate can lead to faster indexing, which helps fresh content become visible sooner.

Conversely, a low crawl rate can delay updates from reaching search results. Factors like site structure, server performance, and content quality all play a role. Optimizing these elements can improve your crawl rate and support timely indexing. By managing crawl limits effectively, you can keep your site’s presence in search results current.

Introduction To Google Crawl Rate

The Google crawl rate matters for your website’s visibility. It defines how often Googlebot visits your site. A higher crawl rate means Googlebot fetches your pages more often, which gives Google more chances to pick up changes. This can help your pages appear in search results faster.

Understanding the crawl rate helps optimize your website. It allows you to manage server load. You can also prioritize important content. This section explores the role of Googlebot and the basics of crawl rate.

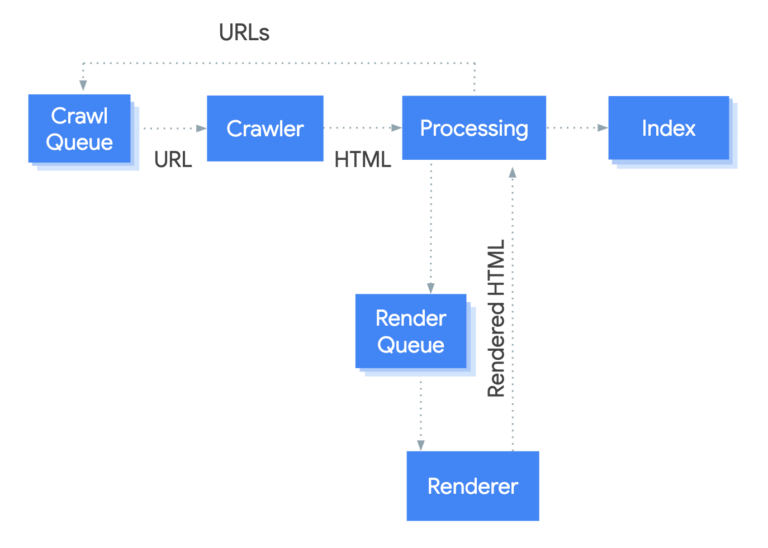

The Role Of Googlebot

Googlebot is Google’s web crawler. It scans websites to gather information. This information helps Google index content for search results.

- Finds new pages

- Updates existing pages

- Identifies broken links

Googlebot works continuously. It follows links from page to page. This process helps Google understand the structure of your site.

Crawl Rate Basics

Crawl rate refers to how often Googlebot visits your site. This is influenced by several factors:

| Factor | Description |
|---|---|
| Site Health | A well-optimized site gets crawled more. |
| Server Performance | Faster servers allow more frequent crawling. |
| Content Updates | Regular updates attract more crawl visits. |

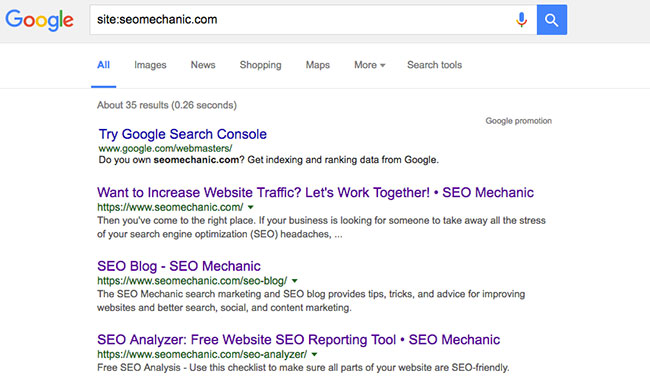

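Monitor your crawl rate using the Crawl Stats report in Google Search Console. This report shows how often Googlebot requests your pages and how your server responds. Search Console previously also offered a crawl rate limiter for capping Googlebot’s request rate, but Google retired that tool in January 2024.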

Managing your crawl rate is important for SEO success. A balanced approach ensures better indexing and user experience.
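Server access logs are another way to see how often Googlebot visits. The sketch below counts Googlebot requests per day. It assumes logs in the common "combined" format, and the function name and sample lines are made up for illustration. User-agent strings can be spoofed, so treat the counts as an estimate.

```python
import re
from collections import Counter

# Captures the date from a combined-log timestamp such as [12/Mar/2024:10:15:32 +0000]
DATE_RE = re.compile(r"\[(\d{2}/[A-Za-z]{3}/\d{4}):")


def googlebot_hits_per_day(log_lines):
    """Count requests per day whose log line mentions Googlebot."""
    hits = Counter()
    for line in log_lines:
        if "Googlebot" not in line:
            continue  # skip regular visitors and other bots
        match = DATE_RE.search(line)
        if match:
            hits[match.group(1)] += 1
    return dict(hits)


# Hypothetical sample lines; real logs would be read from a file.
sample_log = [
    '66.249.66.1 - - [12/Mar/2024:10:15:32 +0000] "GET /blog/ HTTP/1.1" 200 5123 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [12/Mar/2024:10:16:01 +0000] "GET / HTTP/1.1" 200 2048 "-" '
    '"Mozilla/5.0 (Windows NT 10.0)"',
    '66.249.66.1 - - [13/Mar/2024:08:02:11 +0000] "GET /about/ HTTP/1.1" 200 3301 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
]

print(googlebot_hits_per_day(sample_log))
```

A sudden drop in the daily count is often the first hint of a server or robots.txt problem.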

Impact On SEO

Understanding how Google’s crawl rate limits affect your website is crucial. These limits can directly impact your site’s visibility and performance in search results. Let’s explore this topic in detail.

Crawl Rate And Site Indexing

The crawl rate refers to how often Googlebot visits your site. This rate influences how quickly your new content gets indexed. If Googlebot crawls your site frequently, it finds new pages faster.

Factors affecting crawl rate include:

- Server response time

- Website structure

- Number of internal links

Here’s a simple table showing how crawl rate affects site indexing:

| Crawl Rate | Indexing Speed | Content Visibility |
|---|---|---|
| High | Fast | Improved |
| Medium | Moderate | Average |
| Low | Slow | Poor |

Implications For Search Ranking

A higher crawl rate does not raise rankings by itself, since Google does not treat crawl frequency as a ranking signal. It helps indirectly. If Google crawls and indexes your pages quickly, they can appear in search results sooner. This boosts your chances of attracting visitors.

Key implications include:

- Faster updates to search results

- Better chances for new content visibility

- Improved user experience

Low crawl rates can hinder your SEO efforts. Your site may not rank well if Google cannot access your content. Focus on improving your crawl rate to enhance your overall SEO strategy.

Factors Influencing Crawl Rate

Understanding the factors that affect your crawl rate is vital. Several elements play a role in how often Googlebot visits your site. By optimizing these factors, you can improve your visibility on search engines.

Site Structure And Navigation

A well-organized site structure helps Googlebot crawl efficiently. Here are key elements:

- Clear hierarchy: Use categories and subcategories.

- Internal linking: Connect relevant pages for easy access.

- Sitemaps: Submit XML sitemaps to guide crawlers.

- Breadcrumbs: Enhance navigation for users and bots.

These strategies help Google understand your site better. A logical layout leads to more frequent crawls.

Page Load Time And Server Health

Page load time affects user experience and crawl rate. Slow-loading pages can frustrate users and bots. Consider these factors:

| Factor | Impact on Crawl Rate |
|---|---|
| Load Speed | Slow sites get crawled less often. |
| Server Uptime | Frequent downtimes reduce crawl frequency. |
| HTTP Errors | Errors can block crawlers from accessing pages. |

Optimize page load speed to keep users happy. Ensure server health to maintain a steady crawl rate. Regularly check for errors to avoid blocking access.

Optimizing Website For Better Crawling

Optimizing your website for better crawling helps search engines find your content. Google’s crawl rate limits can affect how often your site gets indexed. Improving your site’s structure, content, and user experience enhances crawl efficiency.

Enhancing Content Quality

High-quality content attracts search engines. Focus on creating relevant, engaging material. Here are some tips:

- Use clear, simple language.

- Write unique articles and blog posts.

- Include relevant keywords naturally.

- Update old content regularly.

- Use images and videos to enhance understanding.

Content should answer user questions. Use headings and bullet points for easy reading. Google prefers content that provides value.

Improving User Experience

A good user experience keeps visitors on your site longer. This helps with crawling. Here are ways to improve:

- Ensure fast loading times.

- Make navigation easy and intuitive.

- Use responsive design for mobile users.

- Reduce pop-ups that annoy visitors.

- Include a search function for easy access.

Happy users spend more time on your site. Sites that people visit and link to tend to see more crawl demand from Google. Optimize your website layout for better engagement.

| Factor | Impact on Crawling |
|---|---|
| Content Quality | Higher chances of getting indexed. |
| User Experience | Increases dwell time and crawl frequency. |
| Site Speed | Improves crawl efficiency. |
| Mobile Responsiveness | Essential for Google’s mobile-first indexing. |

Leveraging Crawl Rate Settings In Google Search Console

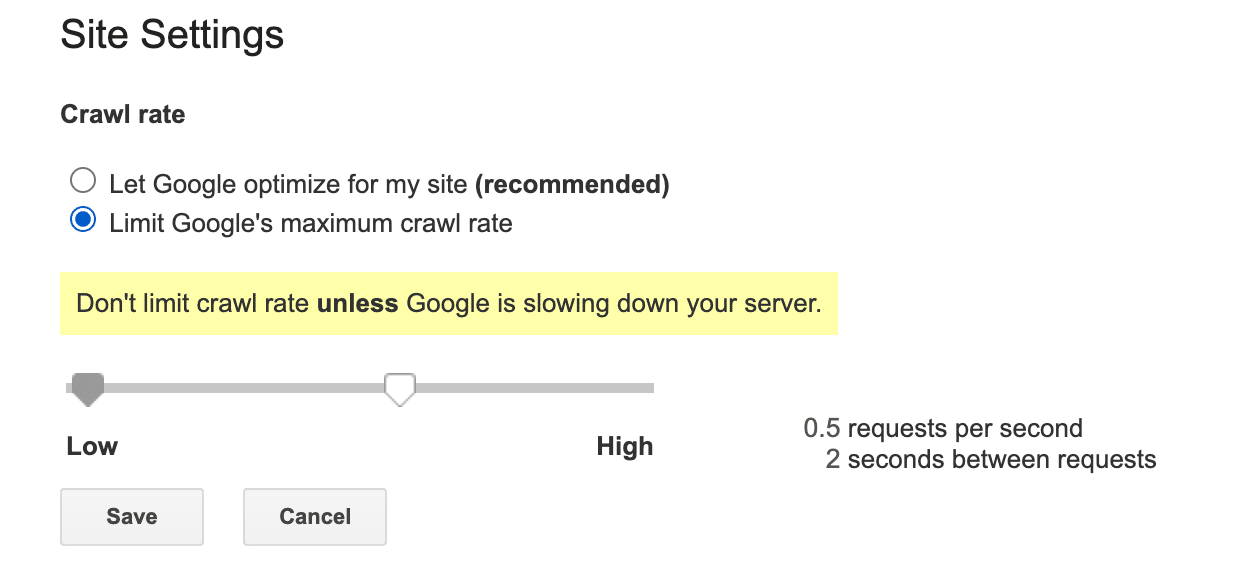

Understanding and managing your website’s crawl rate is crucial. Google Search Console offers tools to help with this. Proper settings can boost your website’s visibility. Adjusting crawl rate settings can improve how Google indexes your pages.

Setting Preferred Crawl Rate

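Setting a preferred crawl rate used to be possible in Google Search Console through its crawl rate limiter. Google retired that tool in January 2024, so the steps below describe the older workflow and may no longer be available in your account:

- Log into your Google Search Console account.

- Select your website property.

- Go to the “Settings” section.

- Click on “Crawl rate.”

- Choose your preferred rate: Slow, Normal, or Fast.

Googlebot now adjusts its rate automatically based on how your server responds. To slow crawling during high-traffic periods, Google’s documentation suggests temporarily returning 500, 503, or 429 status codes. To encourage faster crawling of new content, keep server responses fast and your sitemap current.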
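When crawling strains the server, one documented way to slow Googlebot is to answer temporarily with a 503 or 429 status. The sketch below shows only the decision logic. The `crawl_response` name, the load threshold, and the `Retry-After` value are assumptions for illustration, and whether a given crawler honors `Retry-After` is not guaranteed.

```python
def crawl_response(current_load, max_load=0.85, retry_after_seconds=3600):
    """Pick a status code and headers for a request based on server load.

    current_load is a fraction between 0.0 and 1.0 (hypothetical metric,
    for example CPU utilisation reported by your monitoring).
    """
    if current_load > max_load:
        # Temporary overload: ask clients, including crawlers, to come back later.
        return 503, {"Retry-After": str(retry_after_seconds)}
    # Normal operation: serve the page as usual.
    return 200, {}


print(crawl_response(0.95))
print(crawl_response(0.40))
```

Use this only for short periods. Serving error codes for days can lead Google to drop URLs from its index.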

Monitoring Crawl Stats

Monitoring crawl stats is vital for effective website management. Google Search Console provides valuable insights. Use these stats to identify issues and optimize crawling.

Key metrics to track include:

- Total crawl requests

- Crawl errors

- Pages crawled per day

- Response times

| Crawl Metric | Importance |
|---|---|
| Total Crawl Requests | Indicates how often Google visits your site. |
| Crawl Errors | Shows problems Google faced while crawling. |
| Pages Crawled Per Day | Measures how many pages Google crawls daily. |
| Response Times | Reflects how quickly your server responds. |

Regularly reviewing these metrics helps you spot trends. You can make informed decisions to enhance your site’s performance.
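The metrics above can also be computed from your own data. Here is a minimal sketch, assuming each crawl request is a dictionary with `url`, `status`, and `response_ms` keys. That record shape and the `crawl_summary` name are hypothetical, not a Search Console export format.

```python
from statistics import mean


def crawl_summary(records):
    """Summarise crawl requests: volume, coverage, errors, and speed."""
    total = len(records)
    errors = sum(1 for r in records if r["status"] >= 400)
    return {
        "total_requests": total,
        "unique_urls": len({r["url"] for r in records}),
        "error_rate": errors / total if total else 0.0,
        "avg_response_ms": mean(r["response_ms"] for r in records) if total else 0.0,
    }


# Hypothetical records for one day of Googlebot activity.
records = [
    {"url": "/", "status": 200, "response_ms": 120},
    {"url": "/blog/", "status": 200, "response_ms": 300},
    {"url": "/old-page/", "status": 404, "response_ms": 180},
    {"url": "/", "status": 200, "response_ms": 200},
]

print(crawl_summary(records))
```

Running the summary per day makes trends easier to spot than reading raw numbers.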

The Importance Of Sitemaps

Sitemaps play a crucial role in helping Google crawl your website. They guide search engines to discover and index your pages efficiently. A well-structured sitemap can improve your site’s visibility. This is essential for increasing organic traffic and enhancing user experience.

Sitemap Creation And Submission

Creating a sitemap is straightforward. Follow these steps:

- Choose a sitemap format: XML is the standard for search engines, while an HTML sitemap mainly helps human visitors.

- List all important pages on your website.

- Include metadata like last modified date.

- Use online tools or plugins to generate the sitemap.
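If you prefer to build the file yourself, the steps above can be sketched in a few lines of Python. This example uses the standard library and the sitemaps.org namespace. The `build_sitemap` name and the example.com URLs are placeholders.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, lastmod) pairs; lastmod is YYYY-MM-DD."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, lastmod in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        ET.SubElement(entry, "lastmod").text = lastmod
    # Returns a string without the XML declaration; add one when writing to disk.
    return ET.tostring(urlset, encoding="unicode")


# Placeholder pages for illustration.
pages = [
    ("https://example.com/", "2024-03-01"),
    ("https://example.com/blog/", "2024-03-10"),
]

print(build_sitemap(pages))
```

Save the output as `sitemap.xml` at your site root. For large sites, a plugin or generator is usually less work.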

After creating your sitemap, submit it to Google Search Console. This helps Google find your sitemap quickly. Here’s how:

- Log in to Google Search Console.

- Select your property.

- Go to the Sitemaps section.

- Enter your sitemap URL and click “Submit.”

Sitemap Updates And Management

Regular updates to your sitemap are necessary. This ensures search engines have the latest content. Consider these tips for effective management:

- Update your sitemap whenever you add or remove pages.

- Check for broken links and fix them promptly.

- Use a plugin to automate sitemap generation.

Monitor your sitemap’s performance in Google Search Console. Look for crawl errors and fix them quickly. Regular management keeps your website optimized for search engines. This helps maintain a high crawl rate.

Addressing Crawl Errors

Crawl errors can hurt your website’s performance. They stop Google from accessing your content. Fixing these errors boosts your SEO. Let’s explore how to detect and resolve them.

Detecting Common Crawl Issues

Finding crawl issues is the first step. Use tools like Google Search Console. This tool alerts you to errors. Common errors include:

- 404 Errors: Pages not found.

- Server Errors: Server issues prevent access.

- Redirect Errors: Problems with page redirects.

- Blocked Resources: Files blocked by robots.txt.

Check your website regularly. This helps catch issues early. Look for patterns in error reports. Addressing these quickly can improve crawl rates.
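To look for patterns, it helps to group responses by type. The sketch below sorts HTTP status codes into rough categories that mirror the list above. The category names and the `crawl_issue_report` function are illustrative choices, not Search Console terms.

```python
from collections import Counter


def classify_status(status):
    """Map an HTTP status code to a rough crawl-issue category."""
    if 200 <= status <= 299:
        return "ok"
    if 300 <= status <= 399:
        return "redirect"  # worth reviewing for chains or loops
    if status in (404, 410):
        return "not_found"
    if 400 <= status <= 499:
        return "other_client_error"
    if 500 <= status <= 599:
        return "server_error"
    return "unknown"


def crawl_issue_report(statuses):
    """Count how many responses fall into each category."""
    return dict(Counter(classify_status(s) for s in statuses))


print(crawl_issue_report([200, 404, 301, 503, 200, 500]))
```

A rising share of `server_error` or `not_found` responses is a sign to investigate before crawl rates drop.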

Resolving Errors For SEO Gains

Fixing crawl errors leads to better SEO results. Here’s how:

- Fix 404 Errors: Redirect to relevant pages.

- Resolve Server Errors: Check your server settings.

- Update Redirects: Ensure all redirects work correctly.

- Edit robots.txt: Allow Google to access important files.
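Before and after editing robots.txt, you can check which URLs it blocks. This sketch uses Python's built-in `urllib.robotparser`. The rules and URLs are made-up examples, and the standard-library parser does not replicate every detail of Google's matching (wildcards, for instance), so treat the result as a first check.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt text disallows."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [u for u in urls if not parser.can_fetch(user_agent, u)]


# Hypothetical robots.txt that blocks an assets folder by mistake.
robots_txt = """\
User-agent: *
Disallow: /admin/
Disallow: /assets/
"""

urls = [
    "https://example.com/blog/post",
    "https://example.com/assets/site.css",
    "https://example.com/admin/login",
]

print(blocked_urls(robots_txt, urls))
```

Here the stylesheet under `/assets/` is blocked. Removing that `Disallow` line would let Google fetch the files it needs to render the page.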

Use the following table to track your progress:

| Error Type | Fix | Status |
|---|---|---|
| 404 Error | Redirect or create a new page | Pending |
| Server Error | Check server log | Resolved |
| Redirect Error | Update redirect link | Pending |
| Blocked Resource | Edit robots.txt file | Resolved |

Regularly review your progress. Fixing crawl errors enhances your website’s visibility. Better visibility leads to more visitors. Keep your site healthy for optimal performance.

Case Studies And Success Stories

Understanding how crawl rate limits affect your website can lead to success. Many businesses have optimized their crawl rates. These adjustments often result in better rankings and increased traffic.

Businesses Benefiting From Optimized Crawl Rates

Several companies have seen improvements after optimizing their crawl rates. Here are a few examples:

| Business Name | Before Optimization | After Optimization | Result |
|---|---|---|---|
| ShopSmart | Slow updates | Faster indexing | 25% traffic increase |
| EcoGoods | Low visibility | Higher crawl rate | 30% more sales |
| TechTrends | Stagnant rankings | Improved crawl efficiency | Top 3 search results |

Lessons From Crawl Rate Adjustments

Learning from successful businesses helps others improve too. Here are key lessons:

- Monitor crawl stats: Regularly check your Google Search Console.

- Optimize website speed: Faster sites attract more crawlers.

- Reduce server errors: Fix issues that block crawlers.

- Update content regularly: Fresh content encourages crawlers.

Implement these strategies for better crawl rates. Success stories show that small changes make a big difference. Make your website friendly for search engines.

Future-proofing SEO Strategy

Future-proofing your SEO strategy is crucial for online success. Google’s crawl rate limits impact how often your site gets indexed. Understanding these limits helps you stay competitive. Adapting quickly can lead to better search rankings and more traffic.

Adapting To Algorithm Changes

Google frequently updates its algorithms. Each change can affect your website’s visibility. Here are some key strategies to adapt:

- Monitor updates: Keep an eye on Google’s announcements.

- Analyze your data: Use tools like Google Analytics.

- Optimize content: Ensure it meets the latest SEO guidelines.

- Improve site speed: Faster sites get crawled more often.

Regular updates to your website help maintain relevancy. Fresh content attracts Google’s crawlers. This boosts your chances of ranking higher.

Staying Ahead With Proactive Crawl Management

Effective crawl management ensures your site performs well. Here are some proactive measures:

| Strategy | Description |
|---|---|
| XML Sitemap | Submit an XML sitemap to Google Search Console. |
| Robots.txt | Control which pages Google can crawl. |
| Monitor Crawl Stats | Track how often Google crawls your site. |
| Fix Broken Links | Repair any broken links to improve user experience. |

Implementing these strategies can enhance your website’s visibility. Focus on quality content and user experience. This keeps visitors engaged and encourages return traffic.

Frequently Asked Questions

How Does Crawl Rate Affect My Website’s SEO?

Crawl rate affects your website’s SEO by determining how often search engines fetch your pages. A higher crawl rate means changes are picked up more often, which can lead to better visibility for fresh content. However, excessive crawling can overload your server, causing slowdowns. It’s essential to find a balance for optimal performance.

What Is Crawl Budget In SEO?

Crawl budget is the number of pages search engines crawl on your site within a specific time frame. It varies based on the site’s authority, structure, and update frequency. Efficiently managing your crawl budget ensures that search engines prioritize your most important pages, enhancing their chances of being indexed.
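A rough calculation shows why crawl budget matters for large sites. The sketch below estimates how many days a full crawl would take. It assumes every request hits a different page, which real crawlers do not do, so the true figure is usually higher. The function name and numbers are illustrative.

```python
import math


def days_to_crawl(total_pages, pages_crawled_per_day):
    """Estimate the days needed to crawl every page once."""
    if pages_crawled_per_day <= 0:
        raise ValueError("pages_crawled_per_day must be positive")
    return math.ceil(total_pages / pages_crawled_per_day)


# Hypothetical site: 12,000 pages, about 800 crawled per day.
print(days_to_crawl(12000, 800))  # prints 15
```
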

How Can I Improve My Site’s Crawl Rate?

To improve your site’s crawl rate, focus on optimizing your website’s speed and server response times. Create a clean URL structure and submit an updated sitemap to search engines. Regularly update content and remove broken links. These steps encourage search engines to crawl your site more efficiently.

What Factors Influence Google’s Crawl Rate Limits?

Several factors influence Google’s crawl rate limits, including server health, page load speed, and site architecture. Sites with higher authority and quality content often enjoy better crawl rates. Additionally, if your server frequently experiences downtime, it may lead to a reduced crawl rate as Google prioritizes reliable sites.

Conclusion

Understanding Google’s crawl rate limits is essential for optimizing your website’s visibility. These limits affect how often your pages are crawled and how quickly changes are indexed. By managing your site’s performance and ensuring quality content, you can enhance your crawl rate. Focus on these strategies to support your search rankings and drive more traffic.