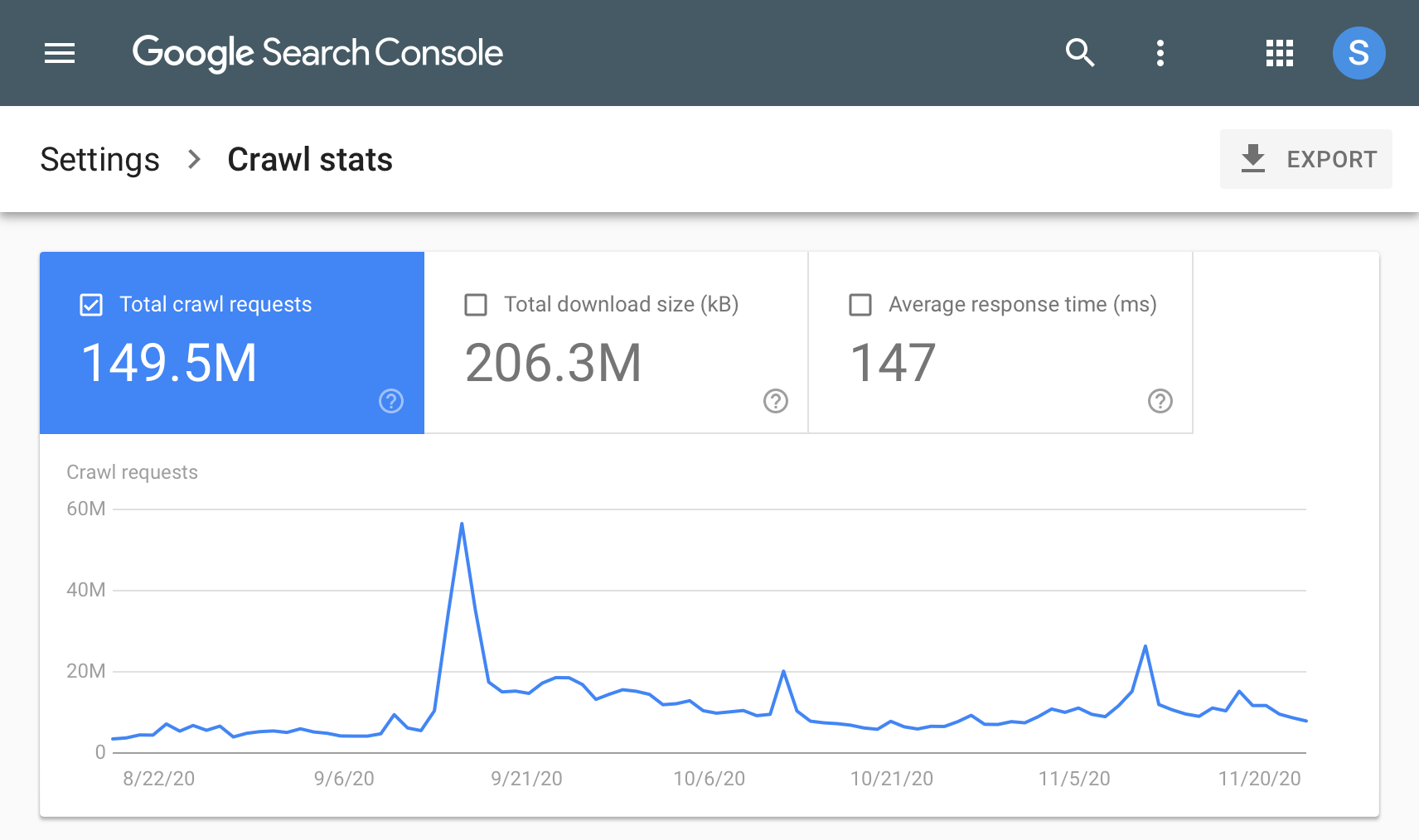

To monitor Google’s crawl rate, use Google Search Console’s Crawl Stats report. Improve it by optimizing your website’s structure and performance.

Monitoring and improving Google’s crawl rate is essential for effective SEO. A higher crawl rate helps Google discover new and updated content sooner, which can lead to better visibility in search results. Regularly checking the Crawl Stats report in Google Search Console helps you understand how often Googlebot visits your site.

Optimizing your website’s structure, enhancing page speed, and regularly updating content are key strategies to improve the crawl rate. By focusing on these aspects, you ensure that your site remains accessible and relevant to both users and search engines, driving better overall performance and higher rankings.

Introduction To Crawl Rate

Understanding Google’s crawl rate is crucial for your website’s performance. It impacts how search engines index your content. This section will explain the basics of crawl rate and its importance for SEO.

What Is Crawl Rate?

Crawl rate is the frequency at which Googlebot visits your site. It affects how quickly new and changed pages can be picked up for indexing. A higher crawl rate means Google refreshes its copy of your pages more often.

Googlebot uses complex algorithms to decide crawl rate. Factors include your site’s quality and structure. A well-optimized site often enjoys a higher crawl rate.

Importance For SEO

A good crawl rate is vital for SEO. It ensures new content is indexed quickly. This helps your site rank better in search results.

Slow crawl rates can delay indexing. This affects your site’s visibility. Monitoring and improving crawl rate can boost your SEO efforts.

How To Monitor Crawl Rate?

Monitoring your crawl rate involves using Google Search Console. This tool provides insights into your site’s crawl statistics. Follow these steps to check your crawl rate:

- Log in to Google Search Console.

- Select your website property.

- Open “Settings” from the left-hand menu.

- Under “Crawl stats”, click “Open report” to view detailed reports.

Regularly monitoring these stats helps identify issues. It also provides data to make necessary improvements.
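
Server access logs can complement the Crawl Stats report, since they record each request Googlebot makes. The following is a minimal sketch, assuming logs in the common "combined" format and identifying Googlebot only by its user-agent string (which can be spoofed, so a real setup would also verify the requester). The function name `googlebot_hits_per_day` and the sample log lines are hypothetical.

```python
import re
from collections import Counter

# Captures the date part of a combined-log timestamp, e.g. [01/Jan/2023:10:00:00 +0000]
DATE_RE = re.compile(r"\[(\d{2}/[A-Za-z]{3}/\d{4}):")

def googlebot_hits_per_day(log_lines):
    """Count requests per day whose log line mentions Googlebot."""
    hits = Counter()
    for line in log_lines:
        if "Googlebot" not in line:
            continue  # skip requests from other clients
        match = DATE_RE.search(line)
        if match:
            hits[match.group(1)] += 1
    return dict(hits)

# Hypothetical log lines for illustration only
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
sample_log = [
    f'66.249.66.1 - - [01/Jan/2023:10:00:00 +0000] "GET / HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [01/Jan/2023:10:05:00 +0000] "GET /blog/ HTTP/1.1" 200 7300 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.7 - - [01/Jan/2023:11:00:00 +0000] "GET / HTTP/1.1" 200 5120 "-" "Mozilla/5.0"',
    f'66.249.66.1 - - [02/Jan/2023:09:00:00 +0000] "GET /about HTTP/1.1" 200 4100 "-" "{GOOGLEBOT_UA}"',
]

print(googlebot_hits_per_day(sample_log))
# {'01/Jan/2023': 2, '02/Jan/2023': 1}
```

Comparing these daily counts with the totals in Search Console is one way to confirm that both sources tell the same story.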

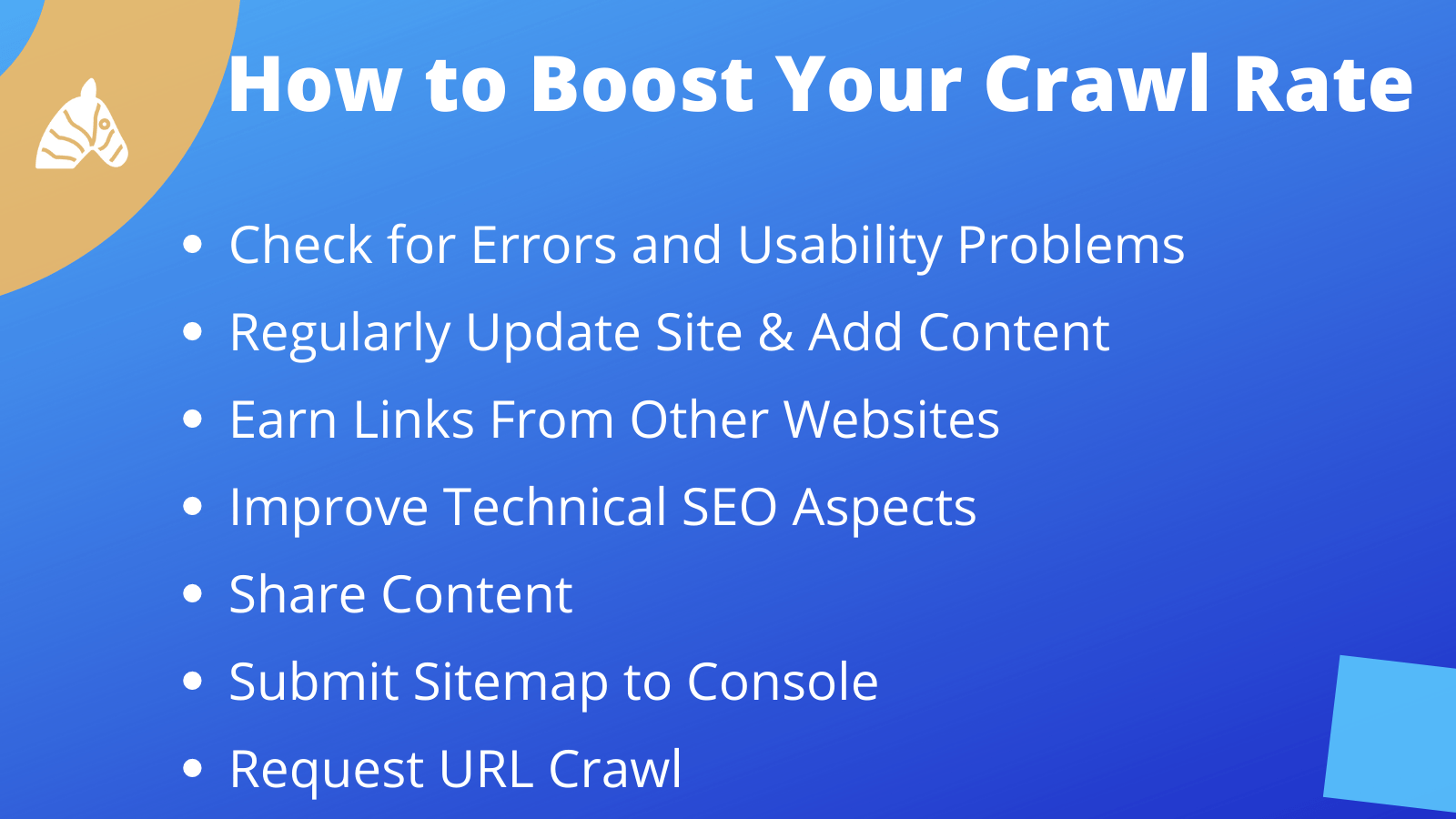

Tips To Improve Crawl Rate

Improving your crawl rate involves several strategies. Here are some effective tips:

- Optimize Page Load Speed: Faster pages are crawled more frequently.

- Update Content Regularly: Fresh content attracts more frequent crawls.

- Fix Broken Links: Broken links can hinder Google’s crawling process.

- Improve Internal Linking: Good internal links help Googlebot navigate your site.

- Submit Sitemap: A sitemap helps Googlebot find all your pages.

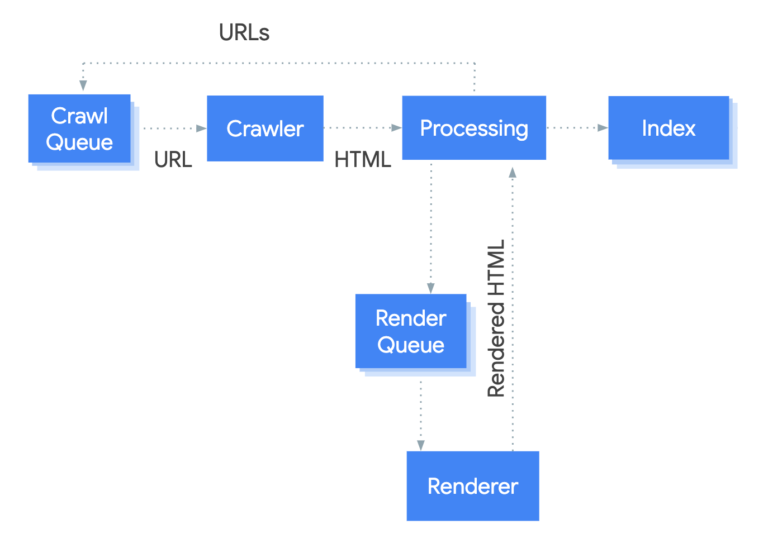

Google’s Crawling Mechanism

Understanding Google’s Crawling Mechanism is essential for optimizing your website’s visibility. Google uses a special process to find and index web pages.

How Googlebot Works

Googlebot is Google’s web crawler. It travels across the internet to find new and updated pages.

Googlebot uses a vast list of URLs. This list comes from past crawls and sitemaps provided by webmasters. The bot then visits these URLs, and each visit is called a crawl.

During the crawl, Googlebot looks at the content and layout of each page. It does this to understand the page’s context and relevance. It follows links from these pages to discover new URLs.

Factors Influencing Crawling

Several factors influence how often Google crawls your site.

- Site Structure: A well-structured site is easier to crawl.

- Page Speed: Faster pages get crawled more often.

- Content Updates: Regularly updated content attracts more crawls.

- Internal Linking: Effective internal linking helps crawlers find pages.

- Backlinks: More quality backlinks can lead to increased crawling.

| Factor | Impact |
|---|---|
| Site Structure | Improves crawl efficiency |
| Page Speed | Increases crawl rate |
| Content Updates | Encourages frequent crawls |
| Internal Linking | Helps discover new pages |
| Backlinks | Boosts crawl priority |

By understanding these factors, you can optimize your site for better crawling. This leads to improved search engine visibility and ranking.

Tools For Monitoring Crawl Rate

Monitoring your website’s crawl rate is essential for SEO success. Using the right tools can make this task easier and more efficient. Below, we explore some of the best tools for monitoring and improving your Google crawl rate.

Google Search Console

Google Search Console is a free tool provided by Google. It helps webmasters monitor website performance. It also offers insights into how Google crawls your site.

- Submit a sitemap to help Google understand your site’s structure.

- Check crawl statistics to see how often Googlebot visits.

- Identify crawl errors and fix them promptly.

- Use the “URL Inspection Tool” to check individual pages.

Google Search Console is user-friendly. It’s a must-have for any webmaster.

Third-party Tools

Third-party tools can offer additional insights. They complement Google Search Console’s features. Here are some popular options:

| Tool | Features | Pricing |
|---|---|---|
| Ahrefs | Site Audit, Crawl Report, Health Score | Paid |
| SEMrush | Site Audit, Crawl Analysis, Error Fixes | Paid |
| Screaming Frog | Site Crawling, Error Detection, SEO Insights | Free & Paid |

These tools offer more detailed reports. They also provide actionable insights.

Using both Google Search Console and third-party tools is beneficial. Each tool offers unique features. Together, they provide a comprehensive view of your website’s crawl rate.

Analyzing Crawl Stats

Understanding your website’s crawl stats is crucial for SEO success. These stats show how often Googlebot requests pages from your site and how your server responds. By analyzing this data, you can identify areas for improvement. This helps ensure your site remains visible in search results.

Interpreting Crawl Data

First, access your crawl data through Google Search Console. Look for the total number of requests made by Googlebot. This shows how often Google visits your site. Pay attention to the average response time. Faster responses let Googlebot fetch more pages without straining your server, and they benefit users too.

Examine the crawl frequency. This indicates how frequently Google crawls your pages. Higher frequency usually means Google considers your content fresh and relevant. Also, review the crawl volume, reported as total download size, to see how much data Googlebot fetched over the period. This helps you understand the size of data Google processes.

Identifying Patterns

Look for patterns in your crawl data. Identify which pages get crawled most often. These are likely your most important pages. If you see some pages are rarely crawled, they may need optimization. Use this information to prioritize your SEO efforts.

Create a table to track your data over time. This can help you see trends and make informed decisions:

| Date | Total Requests | Average Response Time | Crawl Frequency | Crawl Volume |
|---|---|---|---|---|
| 01/01/2023 | 500 | 200ms | Daily | 300KB |
| 01/02/2023 | 600 | 180ms | Daily | 350KB |

Use your findings to improve your site’s structure and content. Ensure important pages are easy to access and load quickly. This will help Google crawl your site more effectively.
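
To make trends in such a tracking table easier to read, the change between two entries can be expressed as a percentage. This is a small sketch using the two example rows above; the field names and the helper names `percent_change` and `summarize_trend` are assumptions made for illustration.

```python
def percent_change(old, new):
    """Percentage change from old to new, rounded to one decimal place."""
    return round((new - old) / old * 100, 1)

# The two example rows from the tracking table
crawl_log = [
    {"date": "01/01/2023", "total_requests": 500, "avg_response_ms": 200},
    {"date": "01/02/2023", "total_requests": 600, "avg_response_ms": 180},
]

def summarize_trend(rows):
    """Compare the first and last rows of a tracking table."""
    first, last = rows[0], rows[-1]
    return {
        "requests_change_pct": percent_change(
            first["total_requests"], last["total_requests"]
        ),
        "response_time_change_pct": percent_change(
            first["avg_response_ms"], last["avg_response_ms"]
        ),
    }

print(summarize_trend(crawl_log))
# {'requests_change_pct': 20.0, 'response_time_change_pct': -10.0}
```

Here requests rose by 20% while the average response time fell by 10%, which is the direction you generally want both numbers to move.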

Improving Crawl Rate

Improving Google’s crawl rate is crucial for better site visibility. A higher crawl rate means your pages get indexed faster. This can lead to improved search engine rankings. Below are some strategies to help boost your site’s crawl rate.

Optimizing Site Structure

A well-structured site makes it easier for Google to crawl. Ensure your website has a clear hierarchy. This means having a proper arrangement of pages and sub-pages.

Use breadcrumbs to show the path of pages. This helps users and bots navigate your site.

- Use internal links to connect related pages.

- Keep your URL structure simple and readable.

- Ensure your sitemap is up-to-date and submitted to Google Search Console.
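
Keeping a sitemap up to date is easier when it is generated rather than edited by hand. Below is a minimal sketch that builds a sitemap in the sitemaps.org XML format using Python's standard library. The `build_sitemap` helper and the example URLs are hypothetical; a real site would pull its page list from a CMS or database.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages):
    """Build sitemap XML from (url, lastmod) pairs; lastmod is a YYYY-MM-DD string."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, lastmod in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        ET.SubElement(entry, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

# Hypothetical pages for illustration only
pages = [
    ("https://example.com/", "2023-01-01"),
    ("https://example.com/blog/", "2023-01-02"),
]
sitemap_xml = build_sitemap(pages)
print(sitemap_xml)
```

The resulting text can be saved as `sitemap.xml` at the site root and then submitted in Google Search Console.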

Enhancing Page Load Speed

Page load speed is a significant factor in crawl rate. Faster pages get crawled more frequently.

Here are some tips to enhance your page load speed:

- Optimize images by compressing them.

- Use browser caching to store static resources.

- Minimize HTTP requests by combining files.

- Leverage Content Delivery Networks (CDNs) to serve content faster.

Ensure your site is mobile-friendly. Google uses mobile-first indexing, so it primarily crawls and indexes the mobile version of your pages.

Managing Crawl Budget

Managing your website’s crawl budget is essential for SEO. It ensures Google efficiently indexes your important pages. This can lead to better rankings and increased organic traffic.

What Is Crawl Budget?

Crawl budget is the set of URLs that Googlebot can and wants to crawl on your site in a given period. It depends on your site’s size and health.

Google allocates a specific crawl budget to each site. Large sites need a higher crawl budget. Small sites need less.

Two main factors influence crawl budget:

- Crawl Rate Limit: The limit on how many requests per second Googlebot makes to your site.

- Crawl Demand: How much content Google wants to crawl on your site.

Strategies To Optimize Crawl Budget

Optimizing your crawl budget helps Google index your site efficiently. Here are some strategies:

- Fix Crawl Errors: Use Google Search Console to identify and fix errors.

- Optimize Site Structure: Ensure a clean, logical structure for easy navigation.

- Update Content Regularly: Keep content fresh to signal search engines.

- Use Robots.txt Wisely: Block unnecessary pages using robots.txt.

- Reduce Duplicate Content: Ensure unique content on each page to avoid wasting crawl budget.

| Strategy | Description |
|---|---|
| Fix Crawl Errors | Identify and fix issues using Google Search Console. |
| Optimize Site Structure | Ensure a clean, logical structure for easy navigation. |
| Update Content Regularly | Keep content fresh to signal search engines. |
| Use Robots.txt Wisely | Block unnecessary pages using robots.txt. |
| Reduce Duplicate Content | Ensure unique content on each page to avoid wasting crawl budget. |

By optimizing your crawl budget, you help Google prioritize important pages. This can improve your site’s visibility and rankings.
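
Before deploying robots.txt rules, it helps to check that they block only what you intend. The sketch below uses Python's standard `urllib.robotparser` module against an in-memory rule set. The blocked paths (`/cart/` and `/search`) are hypothetical examples, and this parser does simple prefix matching, so it may not reflect every pattern Googlebot itself supports.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules that keep crawlers out of low-value pages
robots_txt = """\
User-agent: *
Disallow: /cart/
Disallow: /search
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

def is_crawlable(url, user_agent="Googlebot"):
    """Return True if the parsed rules allow user_agent to fetch url."""
    return parser.can_fetch(user_agent, url)

for url in (
    "https://example.com/blog/post",
    "https://example.com/cart/checkout",
    "https://example.com/search?q=shoes",
):
    print(url, is_crawlable(url))
```

Running a list of important URLs through a check like this can catch a rule that accidentally blocks pages you want crawled.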

Handling Crawl Errors

Monitoring and improving Google’s crawl rate is essential for better SEO. Crawl errors can hinder your website’s performance. They prevent search engines from indexing your content properly. Addressing these errors promptly ensures search engines can crawl your site efficiently.

Common Crawl Errors

Crawl errors occur when Googlebot fails to access your pages. Identifying these errors is crucial. Here are some common crawl errors:

- 404 Errors: Page not found

- 500 Errors: Server issues

- 403 Errors: Forbidden access

- DNS Errors: DNS server issues

- URL Errors: Incorrect URL structure

Fixing Crawl Errors

Fixing crawl errors is vital for improving your site’s crawl rate. Here are steps to address them:

- Use Google Search Console to identify crawl errors.

- Fix 404 errors by updating broken links or redirecting moved URLs to their new location.

- Resolve 500 errors by checking server configurations.

- Address 403 errors by adjusting access permissions.

- Fix DNS errors by verifying your DNS server settings.

- Correct URL errors by ensuring proper URL structure.

Regularly monitor your website for crawl errors. Addressing them promptly improves your site’s crawl rate and SEO performance.
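
When an audit returns many failing URLs, grouping them by the kind of fix they need makes the work easier to plan. This is a rough sketch that maps HTTP status codes to the fixes listed above. The function names and the sample results are hypothetical, and DNS or URL-structure problems are out of scope here because they do not produce a status code.

```python
from collections import defaultdict

def suggest_fix(status_code):
    """Map an HTTP error status to a suggested fix."""
    if status_code == 404:
        return "Update the broken link or redirect the URL"
    if status_code == 403:
        return "Adjust access permissions"
    if 500 <= status_code <= 599:
        return "Check server configuration"
    return "Review manually"

def group_by_fix(crawl_results):
    """Group failing URLs (status 400 and above) by suggested fix."""
    grouped = defaultdict(list)
    for url, status_code in crawl_results:
        if status_code >= 400:
            grouped[suggest_fix(status_code)].append(url)
    return dict(grouped)

# Hypothetical audit output: (path, HTTP status) pairs
results = [("/old-page", 404), ("/admin", 403), ("/report", 500), ("/", 200)]
for fix, urls in group_by_fix(results).items():
    print(fix, urls)
```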

Best Practices For Sustained Improvement

To maintain and enhance Google’s crawl rate, you need to follow best practices. These practices ensure your website remains visible and performs well in search engines. Let’s explore some of the best methods to achieve sustained improvement.

Regular Site Audits

Conducting regular site audits helps identify issues that may affect your crawl rate. Use tools like Google Search Console and Screaming Frog to find and fix problems.

- Check for broken links and fix them.

- Ensure your website has a proper sitemap.

- Look for duplicate content and consolidate or remove it.

- Verify that your site is mobile-friendly.

Regular audits help keep your site healthy. A healthy site attracts more frequent crawls.
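
A first step in checking for broken links is collecting the internal links on each page. The sketch below does this with Python's standard `html.parser`, resolving relative links against the page URL. The class and function names, and the sample HTML, are hypothetical; checking whether each collected link actually responds is left out.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse

class LinkCollector(HTMLParser):
    """Collect absolute URLs from the href attribute of <a> tags."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if href:
            # Resolve relative links against the page URL
            self.links.append(urljoin(self.base_url, href))

def internal_links(html, base_url):
    """Return links that point to the same host as base_url."""
    collector = LinkCollector(base_url)
    collector.feed(html)
    host = urlparse(base_url).netloc
    return [link for link in collector.links if urlparse(link).netloc == host]

# Hypothetical page content for illustration only
sample_html = (
    '<a href="/about">About</a>'
    '<a href="https://other.example/x">External</a>'
    '<a href="post.html">Post</a>'
)
print(internal_links(sample_html, "https://example.com/blog/"))
# ['https://example.com/about', 'https://example.com/blog/post.html']
```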

Continuous Monitoring

Continuous monitoring is crucial for sustained improvement. Set up automated tools to track your site’s performance. Tools like Google Analytics and Ahrefs are beneficial.

- Monitor your server’s response time.

- Track changes in your crawl stats.

- Watch for sudden drops in traffic.

- Identify and fix high-priority errors quickly.

By continuously monitoring, you can address issues as they arise. This proactive approach keeps your site in top condition.
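
One simple way to automate part of this monitoring is to flag days where crawl requests fall sharply compared with the previous day. The sketch below assumes you already record daily request counts. The `sudden_drops` name, the 50% threshold, and the sample numbers are illustrative assumptions, not recommended values.

```python
def sudden_drops(daily_requests, threshold=0.5):
    """Return dates where requests fell by more than `threshold`
    (as a fraction) compared with the previous day."""
    alerts = []
    for (_, prev), (date, current) in zip(daily_requests, daily_requests[1:]):
        if prev > 0 and (prev - current) / prev > threshold:
            alerts.append(date)
    return alerts

# Hypothetical daily Googlebot request counts
history = [("2023-01-01", 500), ("2023-01-02", 600), ("2023-01-03", 200)]
print(sudden_drops(history))
# ['2023-01-03']
```

A flagged date is a prompt to look at server errors, robots.txt changes, or response times for that day.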

Frequently Asked Questions

What Is Google’s Crawl Rate?

Google’s crawl rate is the frequency at which Googlebot visits and indexes your website. It affects how quickly your new content appears in search results.

How Can I Check My Site’s Crawl Rate?

You can check your site’s crawl rate using Google Search Console. Open “Settings”, then open the “Crawl stats” report and review the data.

Why Is Improving Crawl Rate Important?

Improving crawl rate ensures that Google indexes your content quickly. This boosts your site’s visibility and helps in ranking higher in search results.

What Factors Affect Google’s Crawl Rate?

Several factors affect Google’s crawl rate, including website speed, server response time, and the overall structure of your site.

Conclusion

Improving Google’s crawl rate boosts your website’s visibility. Regularly update content and fix broken links. Ensure your sitemap is accurate and optimized. Monitoring and making necessary adjustments can significantly enhance your site’s performance. Implement these strategies to maintain a healthy and efficient crawl rate.

Your website’s success depends on consistent optimization efforts.