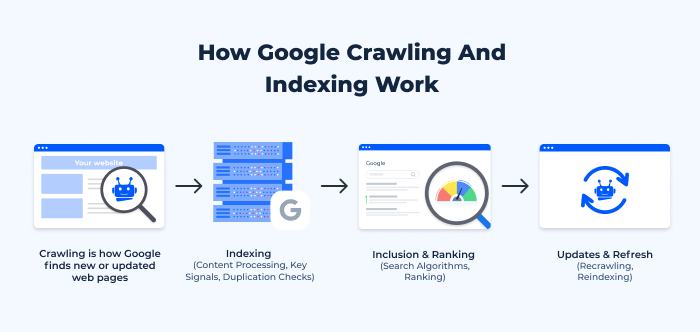

Googlebot crawls the web by following links to discover new content. It indexes this content to make it searchable in Google.

Understanding how Googlebot functions is crucial for optimizing your website. This automated program systematically explores the internet, gathering information on web pages. By following links, Googlebot discovers new content and updates existing pages. Once it collects data, Googlebot indexes it, organizing the information for quick retrieval during searches.

This process affects how your site ranks in search results. Effective SEO strategies ensure that Googlebot can easily access, crawl, and index your content. Knowing the intricacies of this process can significantly enhance your online visibility and improve your site’s performance in search rankings.

Credit: www.sistrix.com

Introduction To Googlebot

Googlebot is the automated program that crawls the web. It collects data from websites. This data helps Google index content for search results. Understanding Googlebot is key for better SEO strategies.

The Birth Of Google’s Crawler

Google’s crawler traces back to 1996, when Larry Page and Sergey Brin began a search engine research project at Stanford University. It was designed to find and index web pages. Over the years, it has evolved significantly. Today, it uses advanced algorithms and technologies.

The project was initially named “BackRub” because it analyzed backlinks. Backlinks helped determine a page’s importance. As the web grew, so did the crawler’s capabilities.

| Year | Milestone |
|---|---|
| 1996 | BackRub research project begins |
| 1997 | BackRub renamed Google |
| 1998 | Google founded; PageRank paper published |
| 2016 | Mobile-first indexing announced |

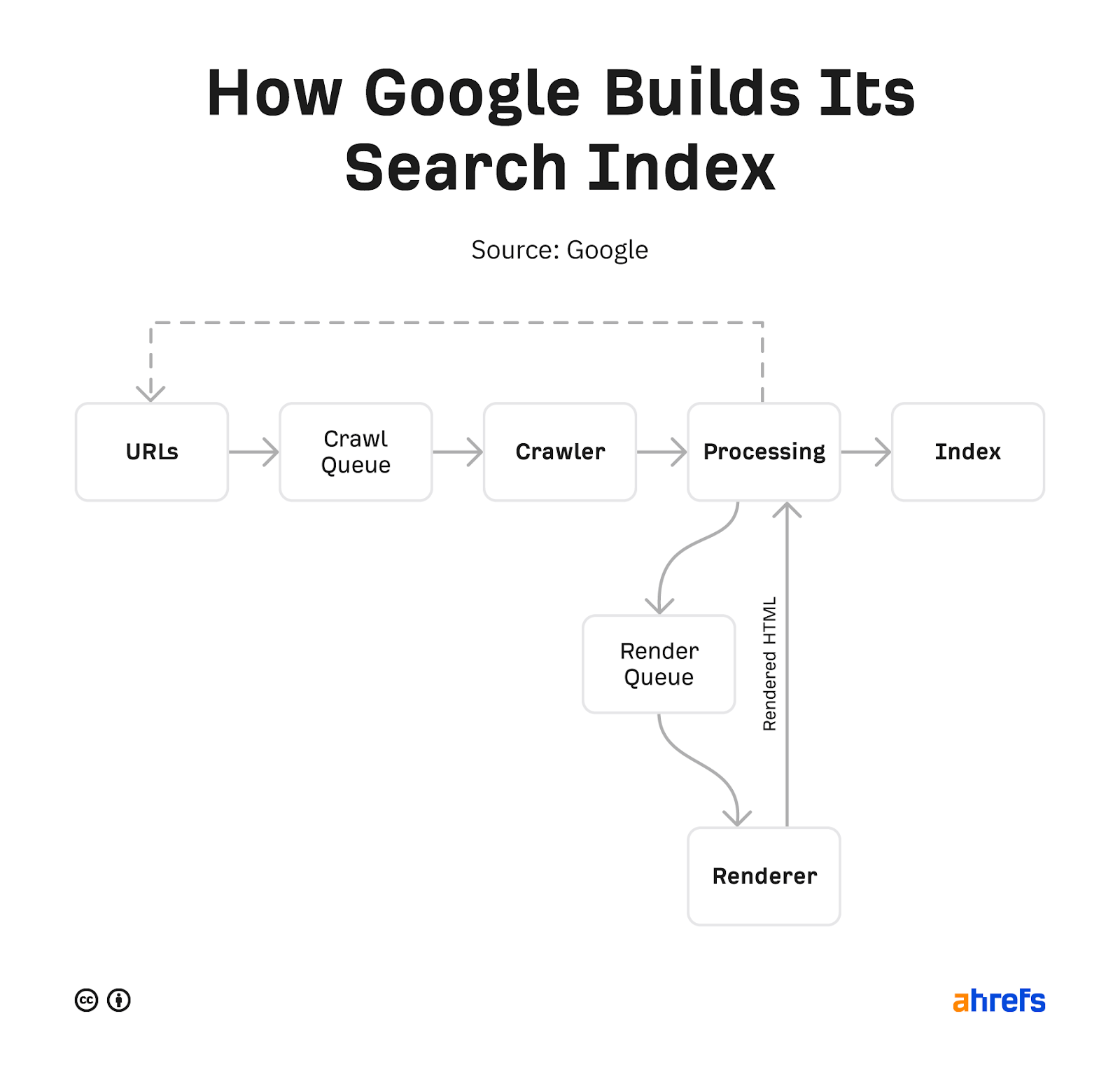

Primary Functions

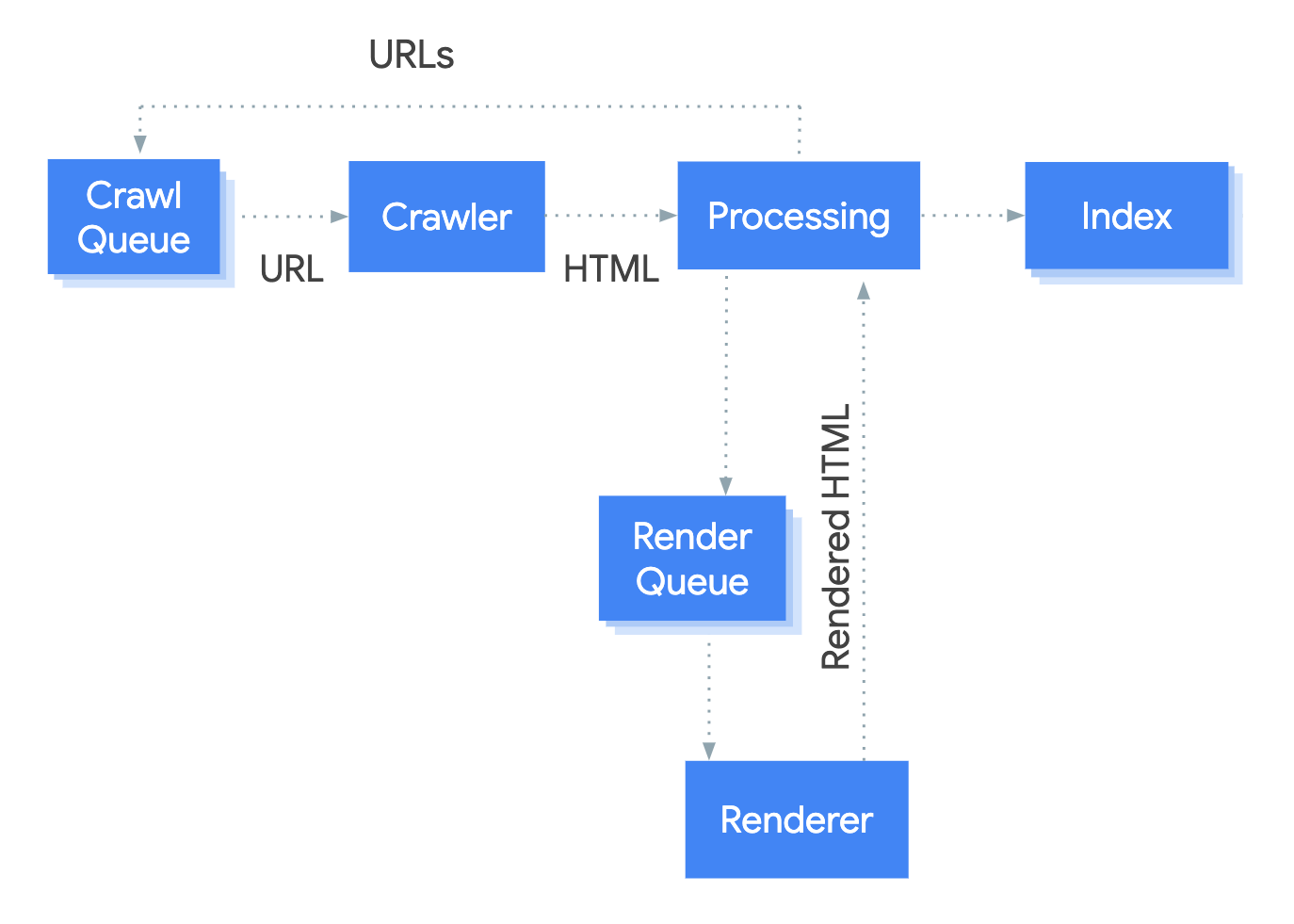

Googlebot has several key functions. These include:

- Crawling: Discovering new and updated pages.

- Indexing: Storing page information for search results.

- Rendering: Understanding how a page looks and behaves.

Crawling involves visiting web pages. Googlebot follows links to find new content. Indexing involves analyzing content. The goal is to categorize it effectively.
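To make the link-following idea concrete, here is a minimal sketch of a breadth-first crawl. It is not how Googlebot is implemented; the `LinkExtractor` class, the `crawl` function, and the in-memory `PAGES` site are all hypothetical and stand in for real HTTP fetching.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in an HTML document."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(start_url, fetch):
    """Breadth-first crawl: visit a page, then queue every link not seen before."""
    seen = {start_url}
    queue = deque([start_url])
    visited = []
    while queue:
        url = queue.popleft()
        html = fetch(url)
        if html is None:  # page could not be fetched
            continue
        visited.append(url)
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            absolute = urljoin(url, href)  # resolve relative links
            if absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return visited


# Hypothetical site held in memory instead of being fetched over HTTP.
PAGES = {
    "https://example.com/": '<a href="/about">About</a> <a href="/blog">Blog</a>',
    "https://example.com/about": '<a href="/">Home</a>',
    "https://example.com/blog": '<a href="/about">About</a>',
    "https://example.com/orphan": "<p>No page links here.</p>",
}

print(crawl("https://example.com/", PAGES.get))
```

The orphan page never appears in the result because no other page links to it, which is why internal linking matters for discovery.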

Rendering is crucial for modern web pages. It helps Googlebot see how pages appear to users. This includes images, videos, and interactive elements.

Each function plays a vital role in search results. Together, they ensure users find relevant information quickly.

Credit: sitechecker.pro

Crawling: The First Step

Crawling is the initial phase in how Google indexes web content. Googlebot, the web crawler, discovers and examines pages. This process helps Google understand what each site offers. Let’s explore how Googlebot discovers sites and how often it crawls them.

How Sites Are Discovered

Googlebot finds new sites through various methods:

- Links: It follows links from known pages.

- Sitemaps: Webmasters submit sitemaps to guide crawlers.

- Social Media: Content shared on social platforms may attract Googlebot.

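- Domain Registration: Registering a domain does not get it crawled on its own. A new domain is usually discovered once another page links to it or its owner submits it to Google.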

These methods help Googlebot efficiently explore the vast web.
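A sitemap is just an XML file that lists the URLs you want crawled. The sketch below builds one with Python’s standard library. The `build_sitemap` function and the example URLs and dates are assumptions for illustration; the `urlset`, `url`, `loc`, and `lastmod` element names come from the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Return sitemap XML for a list of (url, last_modified) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod  # W3C date, e.g. 2024-01-15
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical pages and modification dates.
xml_text = build_sitemap([
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/blog", "2024-02-01"),
])
print(xml_text)
```

You would save the output as a file such as `sitemap.xml` on your server and submit its URL in Google Search Console.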

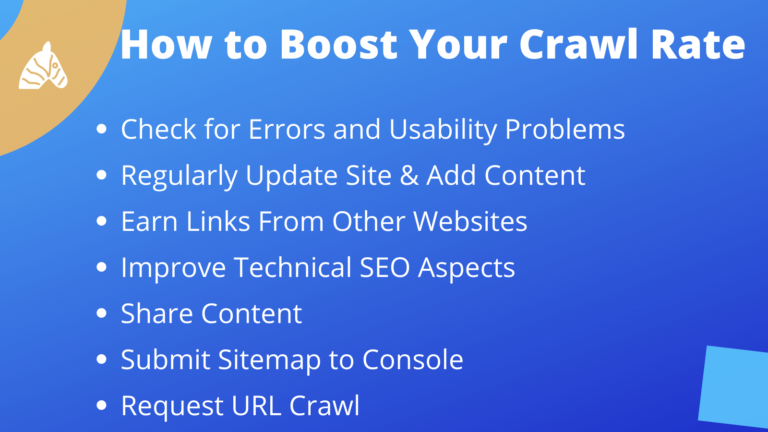

Frequency Of Crawling

The frequency of crawling depends on several factors:

| Factor | Description |
|---|---|
| Site Popularity | High-traffic sites are crawled more often. |
| Update Frequency | Sites with frequent updates attract more crawls. |
| Page Authority | Pages with higher authority get crawled regularly. |

Understanding these factors helps webmasters optimize their site for better indexing.

The Indexing Process

The indexing process is crucial for how Google organizes web content. After Googlebot crawls a page, it needs to store the data effectively. This allows users to find relevant information quickly. Understanding this process helps site owners improve their visibility.

From Raw Data To Searchable Index

After crawling, Googlebot processes raw data. This data includes:

- Text on the page

- Images

- Videos

- Links

Google uses sophisticated algorithms to analyze the data. Key steps in this process include:

- Extracting content and keywords

- Understanding the context

- Storing signals that are used later to rank the page for relevant queries

The processed data is then stored in a massive database called the index. This index allows Google to return results quickly.
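Search indexes are commonly built on a structure called an inverted index, which maps each word to the pages that contain it. The sketch below is a toy version, not Google’s actual index; the `tokenize`, `build_index`, and `search` functions and the `DOCS` data are hypothetical.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(documents):
    """Map each word to the set of URLs whose text contains it."""
    index = defaultdict(set)
    for url, text in documents.items():
        for word in tokenize(text):
            index[word].add(url)
    return index


def search(index, query):
    """Return the URLs that contain every word in the query."""
    words = tokenize(query)
    if not words:
        return set()
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())
    return results


# Hypothetical crawled pages: URL path -> extracted text.
DOCS = {
    "/crawling": "Googlebot follows links to crawl new pages",
    "/indexing": "Google stores crawled pages in the index",
    "/sitemaps": "A sitemap lists pages for Googlebot to crawl",
}

index = build_index(DOCS)
print(sorted(search(index, "googlebot crawl")))
```

Because the lookup goes from word to pages rather than scanning every page, a query only touches the entries for its own words. That is what makes retrieval fast.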

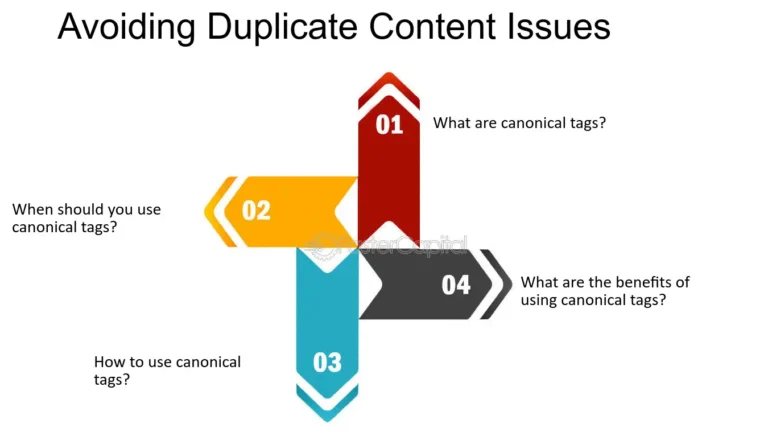

Challenges In Indexing

Indexing is not always straightforward. Several challenges can arise:

- Duplicate content: Multiple pages with similar information confuse Google.

- Dynamic content: Pages that change frequently may not get indexed properly.

- Blocked resources: Some sites prevent Google from accessing content.

- Technical issues: Errors in the site’s code can hinder indexing.

Site owners can resolve these issues. They can use tools like Google Search Console to monitor indexing status.
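Duplicate content is one issue you can check for yourself. The sketch below groups pages whose text is identical after lowercasing and collapsing whitespace. It only catches exact duplicates and is not how Google detects them; the `fingerprint` and `find_duplicates` functions and the sample pages are hypothetical.

```python
import hashlib
import re
from collections import defaultdict


def fingerprint(text):
    """Hash the text after lowercasing it and collapsing whitespace."""
    normalized = re.sub(r"\s+", " ", text.strip().lower())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_duplicates(pages):
    """Group URLs whose normalized text is identical."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Hypothetical pages: the same product text is reachable under two URLs.
SITE_PAGES = {
    "/shoes": "Red running shoes  on sale",
    "/shoes?ref=home": "red running shoes on sale",
    "/hats": "Blue hats",
}

print(find_duplicates(SITE_PAGES))
```

Once you know which URLs share content, you can point them at a single preferred version, for example with a canonical link tag.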

Understanding The Algorithm

Google’s algorithm is a complex system. It decides which pages to show in search results. Many factors influence this system. Knowing these factors helps improve your website’s visibility.

Ranking Factors

Ranking factors are crucial for SEO. They determine how Google ranks a page. Here are some key factors:

- Content Quality: High-quality, relevant content ranks better.

- Keywords: Proper use of keywords boosts visibility.

- Mobile-Friendliness: Mobile-optimized sites perform better.

- Page Speed: Faster pages lead to better rankings.

- Backlinks: Quality backlinks improve trust and authority.

- User Experience: Good design and easy navigation help rankings.

Algorithm Updates

Google frequently updates its algorithm. These updates can change how rankings work. Here are some significant updates:

| Update Name | Year | Description |
|---|---|---|
| Panda | 2011 | Focuses on content quality and relevance. |
| Penguin | 2012 | Targets spammy backlinks and manipulative practices. |
| Hummingbird | 2013 | Improves understanding of search queries. |
| RankBrain | 2015 | Uses AI to better interpret search intent. |
| BERT | 2019 | Enhances understanding of natural language. |

Stay updated with these changes. Understanding them helps in optimizing your site.

SEO Strategies For Success

Understanding Google’s crawling and indexing process is vital. Implementing effective SEO strategies leads to better visibility. Two core areas are content quality and technical SEO. Focus on these to enhance your site’s performance.

Content Is King

High-quality content attracts both users and Googlebot. Create engaging, informative, and relevant content.

- Keyword Research: Identify relevant keywords for your audience.

- Value-Driven Content: Provide solutions and answers.

- Regular Updates: Refresh content to keep it current.

- Use Media: Include images and videos for engagement.

Content should be original. Plagiarized content can harm your ranking. Rather than targeting a fixed word count, write enough to cover the topic with depth and detail. Google has said that word count by itself is not a ranking factor.

Technical SEO Essentials

Technical SEO ensures that Google can crawl your site easily. Follow these essential practices:

- Mobile Optimization: Ensure your site works well on mobile devices.

- Page Speed: Optimize images and reduce server response time.

- XML Sitemap: Create and submit a sitemap to Google.

- Secure Website: Use HTTPS for better security.

Monitor your website’s performance regularly. Use tools like Google Search Console. Fix any crawl errors promptly. This will improve your site’s visibility.

| SEO Element | Importance |
|---|---|
| Content Quality | High |
| Mobile Optimization | High |
| Page Speed | Medium |
| Secure Website | High |
| XML Sitemap | Medium |

Implementing these strategies boosts your SEO. Stay updated with the latest SEO trends. Adapt your strategies as needed. This helps maintain your site’s ranking.

Mobile-first Indexing

Mobile-First Indexing means Google uses the mobile version of a website for indexing. Most users access websites through mobile devices. This change helps Google provide better search results.

Impact On Rankings

Mobile-First Indexing affects how websites rank in search results. Here are key impacts:

- Mobile-friendly sites rank higher.

- Desktop-only sites may drop in rankings.

- Page load speed on mobile is critical.

Websites need to ensure mobile usability. Google checks site performance on mobile first. Poor mobile performance can hurt visibility.

Best Practices

To optimize for Mobile-First Indexing, follow these best practices:

- Use responsive design for your website.

- Ensure all content is accessible on mobile.

- Optimize images for faster loading.

- Keep navigation simple and clear.

- Test your site with a tool such as Lighthouse. Google retired its standalone Mobile-Friendly Test tool in 2023.

Regular updates help maintain mobile performance. Always monitor user experience on mobile devices.
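One quick check for responsive design is whether a page declares a viewport meta tag. The sketch below looks for it in an HTML string. It is a rough heuristic rather than a full mobile audit, and the `ViewportFinder` class and `has_responsive_viewport` function are hypothetical names.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Records the content of <meta name="viewport"> if the page has one."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        if tag == "meta":
            attributes = dict(attrs)
            if (attributes.get("name") or "").lower() == "viewport":
                self.viewport = attributes.get("content") or ""


def has_responsive_viewport(html):
    """True if the page sets its viewport width to the device width."""
    finder = ViewportFinder()
    finder.feed(html)
    return finder.viewport is not None and "width=device-width" in finder.viewport


responsive = (
    '<head><meta name="viewport" '
    'content="width=device-width, initial-scale=1"></head>'
)
desktop_only = "<head><title>Old site</title></head>"

print(has_responsive_viewport(responsive))
print(has_responsive_viewport(desktop_only))
```

A page can pass this check and still be hard to use on a phone, so treat it as a first filter before testing on real devices.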

Common Myths Around Googlebot

Many people misunderstand how Googlebot works, which leads to confusion. Let’s clear up some common myths about Googlebot.

Misconceptions

- Googlebot sees everything: Some think Googlebot can view all content.

- Googlebot is a human: Many believe Googlebot behaves like a person.

- All sites are crawled equally: Some assume every site is treated the same.

- Meta tags are the only way to rank: Many think meta tags control rankings entirely.

The Truth Behind The Myths

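Googlebot does not see everything. It discovers pages mainly through links and sitemaps, so a page with no inbound links and no sitemap entry is unlikely to be found. It also cannot reach content that is blocked by robots.txt or hidden behind a login. This is a major reason why internal linking is important.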

Googlebot is not human. It is a program that follows rules. It does not interpret content like a person. It uses algorithms to understand data.

Not all sites are crawled equally. Popular sites get crawled more often. New or smaller sites may take longer. This is based on the site’s authority and relevance.

Meta tags do help, but they aren’t everything. Google looks at many factors. Content quality, backlinks, and user experience matter too.

| Myth | Truth |
|---|---|
| Googlebot sees everything | It sees only content it can discover and access. |
| Googlebot is human | It is a program, not a person. |
| All sites are crawled equally | Popular sites get priority. |
| Meta tags control ranking | Many factors affect ranking. |

Tools And Resources

Understanding how Googlebot crawls and indexes websites can help you optimize your site. Utilizing the right tools and resources is essential. They provide insights and data to improve your website’s visibility.

Google’s Webmaster Tools

Google’s Webmaster Tools, now known as Google Search Console, is essential for webmasters. It offers a variety of features to help monitor your site’s performance.

- Performance Reports: Track clicks, impressions, and rankings.

- Coverage Reports: Identify crawling errors and index issues.

- Sitemaps: Submit and manage your XML sitemaps.

- Mobile Usability: Check for mobile-friendly design issues.

Use these features to optimize your site. They help Googlebot understand your content better.

Third-party SEO Tools

Many third-party SEO tools can enhance your SEO strategy. They provide valuable data for optimizing your website.

| Tool Name | Primary Features |
|---|---|
| SEMrush | |
| Ahrefs | |
| Moz | |

Choose tools that fit your needs. They can provide insights to boost your website’s performance.

The Future Of Googlebot And Seo

The future of Googlebot holds exciting changes for SEO. As technology evolves, so does Google’s approach to crawling and indexing. Understanding these shifts is key for marketers and webmasters. Staying informed will help optimize websites effectively.

Emerging Trends

Several trends are shaping the future of Googlebot and SEO:

- AI Integration: Googlebot may rely more on artificial intelligence for smarter crawling.

- Voice Search Optimization: More users rely on voice search. Content will need to adapt.

- Mobile-First Indexing: Mobile usability becomes crucial. Websites must perform well on mobile devices.

- Core Web Vitals: Page experience metrics will impact rankings. Speed and user experience matter.

- Structured Data: Markup helps Google understand content. This can improve visibility in search results.
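Structured data is usually added to a page as a JSON-LD script tag that uses the schema.org vocabulary. The sketch below generates one for an article. The `article_json_ld` function and the sample values are hypothetical; `@context`, `@type`, `headline`, `author`, and `datePublished` are schema.org property names.

```python
import json


def article_json_ld(headline, author, date_published):
    """Return a JSON-LD <script> tag describing an article."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,
    }
    # Escape "</" so a value cannot close the script tag early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


# Hypothetical article details.
snippet = article_json_ld("How Googlebot Crawls", "Jane Doe", "2024-01-15")
print(snippet)
```

The resulting tag goes in the page’s HTML, typically inside the head element.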

Staying Ahead In The Game

To stay ahead in SEO, consider these strategies:

- Regularly update content for relevance.

- Optimize for mobile devices.

- Enhance page speed and user experience.

- Implement structured data markup.

- Focus on long-tail keywords for voice search.

Using these techniques will help maintain a competitive edge. Keeping an eye on Googlebot’s updates is essential. Adapting quickly to changes will lead to better search rankings.

Credit: ahrefs.com

Frequently Asked Questions

How Does Googlebot Crawl Websites?

Googlebot uses algorithms to discover and index web pages. It follows links from one page to another, analyzing content along the way. This process helps Google understand the relevance and quality of each page. Regular crawling ensures that search results are up-to-date and relevant for users.

What Is The Purpose Of Google Indexing?

Google indexing organizes and stores web content for quick retrieval. When a user searches, indexed pages are displayed based on relevance. This process helps Google determine which pages to show for specific queries. Efficient indexing improves search accuracy and user experience significantly.

How Often Does Googlebot Crawl My Site?

The frequency of crawling depends on several factors. These include site authority, update frequency, and overall quality. Websites with fresh content may be crawled more often. Typically, Googlebot may visit high-quality sites daily, while others might be crawled weekly or monthly.

What Factors Affect Googlebot Crawling?

Several factors influence how Googlebot crawls your site. Site speed, structure, and internal linking play crucial roles. Additionally, the robots.txt file can restrict crawling. Quality content and regular updates also encourage Googlebot to visit more frequently.
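You can check how robots.txt rules apply to a URL with Python’s built-in `urllib.robotparser` module. The sketch below shows how that module interprets a small file, which may differ in edge cases from Googlebot’s own parser. The rules in `ROBOTS_TXT` and the example URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: one group for all crawlers, one for Googlebot.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/

User-agent: Googlebot
Disallow: /drafts/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def allowed(user_agent, url):
    """True if the parsed rules let this crawler fetch the URL."""
    return parser.can_fetch(user_agent, url)


print(allowed("Googlebot", "https://example.com/blog/"))
print(allowed("Googlebot", "https://example.com/drafts/post"))
print(allowed("OtherBot", "https://example.com/admin/"))
```

A crawler follows only the group that matches it most specifically. Here the Googlebot group does not repeat the `/admin/` rule, so that path is not blocked for Googlebot even though it is blocked for other crawlers.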

Conclusion

Understanding how Googlebot operates is essential for optimizing your website. By grasping the crawling and indexing process, you can improve your site’s visibility. Implementing best practices will help you stay ahead of the competition. Keep your content fresh and relevant to ensure Googlebot finds and ranks your pages effectively.