To prevent Google from indexing certain pages, use the “noindex” meta tag, and use your robots.txt file to control which pages Google crawls. Together, these methods signal to search engines which pages to ignore.

Webmasters often need to control which pages appear in search results. Keeping certain content private or irrelevant to users can enhance a website’s focus. Search engines may index all pages by default, leading to unwanted exposure. Applying the right techniques is crucial for maintaining a clean online presence.

Using the “noindex” meta tag allows specific pages to be excluded from search results. Alternatively, the robots.txt file can block crawlers from entire directories or specific files. Understanding these tools helps improve site management and SEO strategy. Clear guidelines ensure that only the most relevant pages reach your audience.


Introduction To Google Indexing

Google indexing is a vital part of how search engines work. Google crawls your pages, analyzes their content, and stores them in its index. Only indexed pages can appear in search results; pages that are not indexed stay hidden from searchers. Managing which pages get indexed is crucial for effective SEO.

The Role Of Indexing In SEO

Indexing plays a key role in search engine optimization (SEO). Here are some important points:

- Visibility: Indexed pages show up in search results.

- Ranking: Google ranks indexed pages based on relevance.

- Traffic: More indexed pages can lead to more visitors.

- Content Understanding: Indexing helps Google grasp your content.

Reasons To Restrict Page Indexing

Sometimes, you may want to restrict indexing. Here are common reasons:

- Duplicate Content: Prevent search engines from indexing similar pages.

- Private Information: Keep sensitive data away from public view.

- Low-Quality Pages: Exclude pages that offer little value.

- Temporary Content: Hide seasonal or promotional pages.

Understanding these reasons helps you manage your site’s visibility. Use the right methods to control what Google indexes.

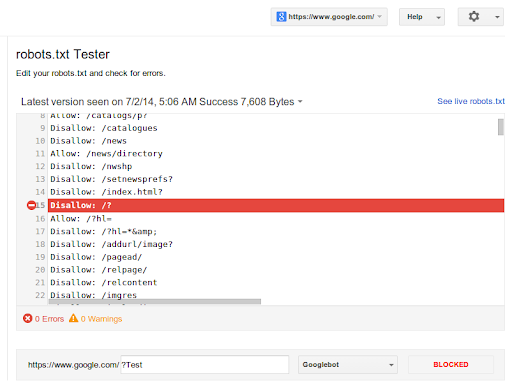

Using Robots.txt File To Control Access

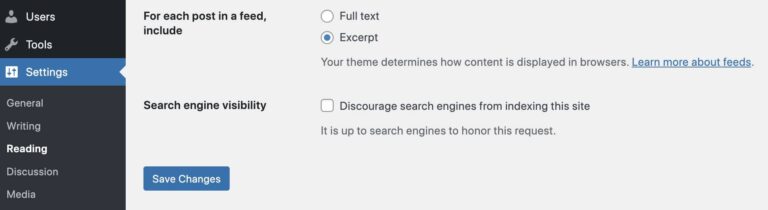

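The robots.txt file is essential for managing web crawlers. It tells search engines which pages they may crawl and which to skip. By setting up this file correctly, you can control crawler access to specific areas of your website. Keep in mind that a blocked URL can still be indexed without its content if other sites link to it, so use noindex for pages that must stay out of search results.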

Creating And Editing Robots.txt

Creating a robots.txt file is simple. Follow these steps:

- Open a text editor like Notepad or TextEdit.

- Start with the user-agent line.

- Define which pages to allow or disallow.

- Save the file as robots.txt.

Here’s a basic example:

    User-agent: *
    Disallow: /private/
    Allow: /public/

This example tells all crawlers to avoid the /private/ directory but allows access to /public/.
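Rules like these can be sanity-checked before they go live. The sketch below uses Python's standard-library `urllib.robotparser`; the `example.com` URLs and the helper name `is_crawl_allowed` are illustrative assumptions. Python's parser applies the first matching rule, while Google prefers the most specific match, so results may differ on more complex files.

```python
from urllib.robotparser import RobotFileParser

# The example rules, one directive per line.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /public/
"""


def is_crawl_allowed(robots_txt: str, url: str, user_agent: str = "*") -> bool:
    """Return True if the robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    # example.com is a placeholder domain used only for illustration.
    for path in ("/private/report.html", "/public/index.html"):
        print(path, is_crawl_allowed(ROBOTS_TXT, "https://example.com" + path))
```

A check like this only confirms crawl rules. It says nothing about whether a URL is already in Google's index.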

Common Mistakes To Avoid

A few common mistakes can harm your SEO. Be cautious:

- Using incorrect syntax can lead to errors.

- Blocking essential pages may reduce visibility.

- Forgetting to update the file after site changes.

- Not testing the file, for example with the robots.txt report in Google Search Console.

Always review your robots.txt file regularly. Keep it updated to reflect your current website structure.

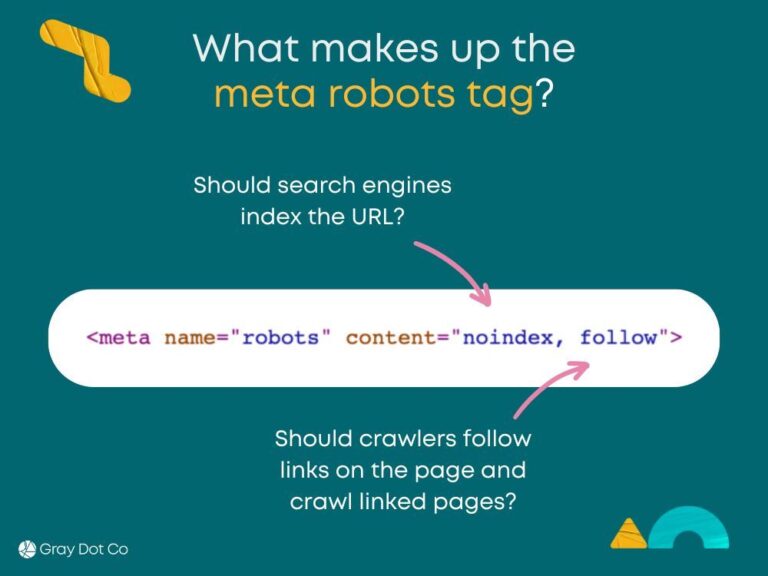

Meta Tags For Page-level No-index

Using meta tags helps control which pages Google indexes. The no-index tag tells search engines not to include a page in search results. This method is effective for preventing unwanted pages from showing up online.

Implementing No-index Meta Tags

To implement a no-index meta tag, follow these steps:

- Open the HTML file of the page.

- Locate the `<head>` section.

- Add the following line of code: `<meta name="robots" content="noindex">`

After adding the line, save the file. Upload it to your server. This tells search engines to skip this page during indexing.
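After uploading, it can help to confirm the tag is actually present in the served HTML. The following is a minimal sketch using Python's standard-library `html.parser`; the class and function names are hypothetical, and it only inspects the generic `robots` meta tag, not crawler-specific ones such as `googlebot`.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {key: (value or "") for key, value in attrs}
        if attr.get("name", "").lower() == "robots":
            # The content attribute is a comma-separated list of directives.
            for part in attr.get("content", "").split(","):
                if part.strip():
                    self.directives.add(part.strip().lower())


def has_noindex(html: str) -> bool:
    """Return True if the HTML contains a robots meta tag with noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives
```

For example, `has_noindex('<head><meta name="robots" content="noindex"></head>')` evaluates to `True`.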

Meta Tags Vs. Robots.txt

Both meta tags and robots.txt control indexing. Here’s how they differ:

| Feature | Meta Tags | Robots.txt |

|---|---|---|

| Control Level | Page-specific | Site-wide |

| Ease of Use | Simple to add | Requires file editing |

| Search Engine Response | Directly instructs | Guides crawling |

Use meta tags for specific pages. Use robots.txt for broader crawl control. Avoid applying both to the same URL: if robots.txt blocks a page, Google cannot crawl it and will never see its noindex tag.

Password Protection And User Authentication

Password protection is vital for keeping certain pages private. It prevents unauthorized access. User authentication adds an extra layer of security.

Together, these methods help control who sees your content. They also stop search engines from indexing these pages.

Setting Up Passwords For Private Pages

Setting up passwords is simple. Follow these steps:

- Access your website’s dashboard.

- Navigate to the page you want to protect.

- Look for the “Visibility” options.

- Select “Password Protected.”

- Enter a strong password.

- Save your changes.

Use a mix of letters, numbers, and symbols. This makes it harder for others to guess.

Impact On User Experience

Password protection affects user experience positively and negatively.

| Positive Impact | Negative Impact |

|---|---|

| Enhances security for sensitive information. | May frustrate users who forget passwords. |

| Gives control over who can view content. | Requires extra steps to access pages. |

| Encourages trust among users. | Can reduce site traffic if users are locked out. |

Weigh these impacts carefully. Ensure a balance between security and user convenience.

Leveraging The Canonical Tag

The canonical tag is a powerful tool. It helps control how search engines index your pages. Use it wisely to prevent duplicate content issues. This keeps your site clean and boosts SEO.

Canonical Tag Explained

The canonical tag is an HTML element. It tells search engines which version of a page to index. Here’s how it looks, with a placeholder URL: `<link rel="canonical" href="https://example.com/preferred-page/">`

Place this tag in the `<head>` section of your HTML. Use it on pages with similar content. This prevents confusion for search engines.
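To check which canonical URL a page declares, something like the sketch below may be useful. It relies on Python's standard-library `html.parser`; `find_canonical` and the sample URL are assumptions made for illustration.

```python
from html.parser import HTMLParser
from typing import Optional


class CanonicalParser(HTMLParser):
    """Records the href of the first <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonical: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attr = dict(attrs)
        # rel may hold several space-separated values.
        rels = (attr.get("rel") or "").lower().split()
        if "canonical" in rels and attr.get("href"):
            self.canonical = attr["href"]


def find_canonical(html: str) -> Optional[str]:
    """Return the declared canonical URL, or None if the page has none."""
    parser = CanonicalParser()
    parser.feed(html)
    return parser.canonical
```

Running it over each duplicate page should return the same preferred URL. A `None` result points to a page that still needs the tag.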

Preventing Duplicate Content Issues

Duplicate content can harm your site’s SEO. Here are some key points:

- Identify similar or duplicate pages.

- Choose the best version to represent.

- Implement the canonical tag on all duplicates.

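Doing this signals to search engines which URL you prefer, so ranking signals are consolidated on one page instead of being split across duplicates. It also reduces the risk of duplicates competing with each other in search results. Keep your website healthy and rankings high.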

Use the canonical tag for:

- Product variations in e-commerce.

- Different URLs for the same content.

- Content syndication across multiple sites.

Implementing the canonical tag correctly helps protect your content. It makes your preferred URL the most likely choice for indexing, although Google treats the tag as a hint rather than a strict rule.

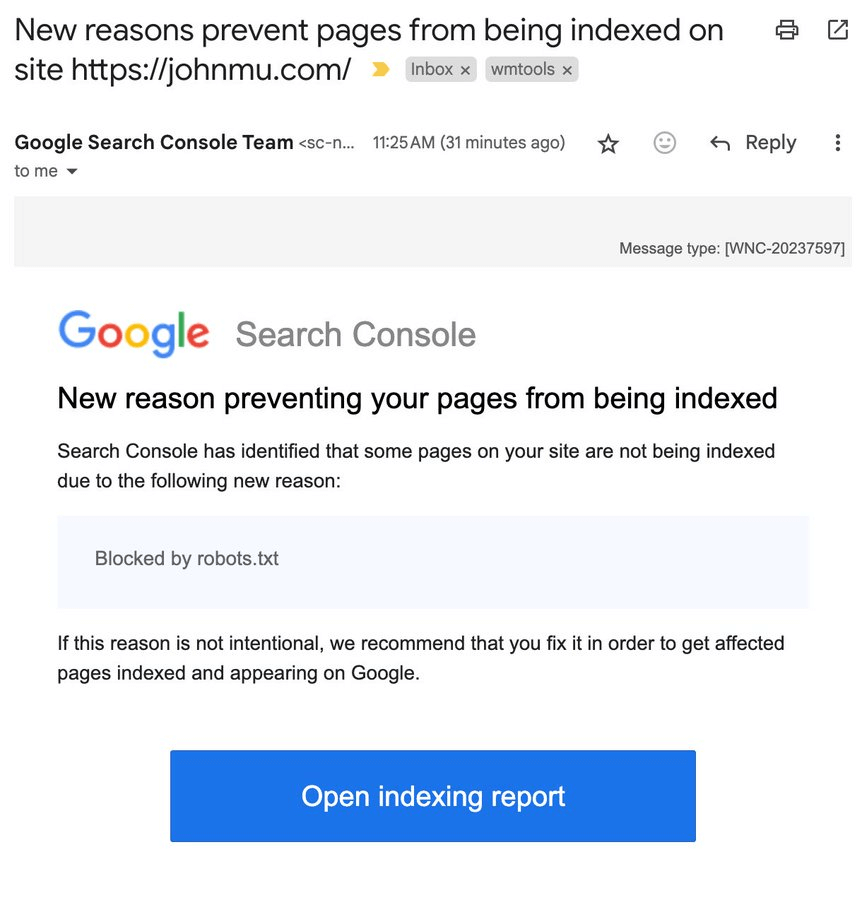

Using Google Search Console Removal Tool

The Google Search Console Removal Tool helps control indexed pages. It can temporarily hide URLs from search results; removing them permanently requires an additional step on your own site. This tool is essential for managing your site’s visibility.

Temporary Vs Permanent Removal

Understanding the difference between temporary and permanent removal is crucial.

| Type | Description | Duration |

|---|---|---|

| Temporary Removal (Removals tool) | Hides the URL from Google Search results. | Lasts for about 6 months. |

| Permanent Removal (not available in the tool) | Requires a noindex directive, password protection, or deleting the page so it returns a 404 or 410 status. | Effective for as long as the block stays in place. |

Steps For Requesting URL Removal

Follow these steps to request URL removal:

- Sign in to your Google Search Console account.

- Select your property from the dashboard.

- Click on Removals in the left menu.

- Click on the New Request button.

- Enter the URL you want to remove.

- Choose the request type: temporarily remove the URL or clear the cached URL.

- Submit your request.

Check the status of your request in the Removals section.

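X-Robots-Tag For Non-HTML Files

The X-Robots-Tag is a powerful tool. It helps control how search engines index files. Because it is sent as an HTTP response header, it works for non-HTML files like PDFs and images, which cannot carry a meta tag. Use it to keep such files out of search results and manage SEO. It does not restrict access, so truly sensitive files still need password protection.

Configuring HTTP Headers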

Configuring the X-Robots-Tag requires setting HTTP headers. This can be done in various ways:

- Using server configuration files (like .htaccess).

- Through content management systems (CMS).

- Via web hosting control panels.

Here is an example of how to set the header using .htaccess:

    Header set X-Robots-Tag "noindex, nofollow"

This code tells search engines not to index any file the rule applies to and not to follow links in it. It requires Apache's mod_headers module. You can replace “noindex, nofollow” with other directives as needed.
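If the header is set in application code rather than in a server configuration file, the same idea can be expressed as a small helper. This is only a sketch: the extension list and the function name `robots_headers_for` are hypothetical, and wiring the returned headers into a response depends on your framework.

```python
import os

# Hypothetical set of file extensions to keep out of search results.
NOINDEX_EXTENSIONS = {".pdf", ".doc", ".docx"}


def robots_headers_for(path: str) -> dict:
    """Return extra response headers for the file at the given path."""
    extension = os.path.splitext(path)[1].lower()
    if extension in NOINDEX_EXTENSIONS:
        return {"X-Robots-Tag": "noindex, nofollow"}
    # Other files get no extra header and stay indexable.
    return {}
```

Limiting the header to chosen file types avoids accidentally applying noindex to every response the server sends.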

Use Cases For X-Robots-Tag

The X-Robots-Tag has many practical uses:

- Keeping sensitive files out of search results: Stop confidential documents from being indexed (combine with password protection to restrict access).

- Avoiding duplicate content: Prevent indexing of similar files.

- Managing image SEO: Control how images are indexed.

Here’s a quick table showing some directives:

| Directive | Description |

|---|---|

| noindex | Do not show the file in search results. |

| nofollow | Do not follow links in the file. |

| noarchive | Do not save a cached copy of the file. |

Using the X-Robots-Tag effectively can enhance your site’s SEO strategy. It helps you keep control over what search engines see.


Advanced Methods And Considerations

Preventing Google from indexing certain pages requires careful planning. Use advanced methods for effective exclusions. Understand how to maintain your site structure while ensuring Google respects your preferences.

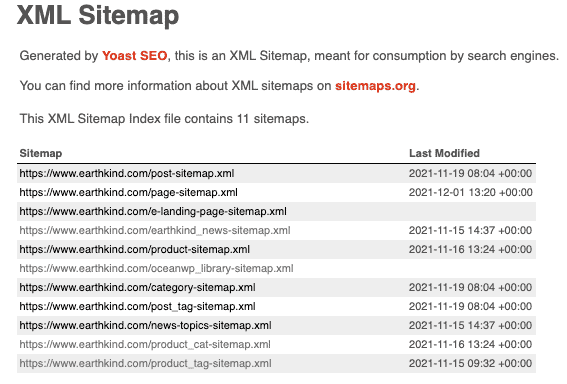

Utilizing Sitemaps

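Sitemaps guide search engines. They show which pages you want indexed. Leave unwanted pages out of the sitemap, but remember that omission alone does not prevent indexing if Google finds the page through links.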

Follow these steps to utilize sitemaps:

- Generate an XML sitemap.

- Include only desired pages.

- Submit the sitemap to Google Search Console.

Consider adding a noindex directive to the pages you want to exclude. This informs search engines not to index those pages.
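The sitemap steps can be sketched in a few lines of Python with the standard-library `xml.etree.ElementTree`. The `pages` mapping (URL to an "indexable" flag), the function name, and the `example.com` URLs are assumptions for illustration; a real site would build this list from its CMS or database.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: dict) -> str:
    """Build sitemap XML from a mapping of URL -> should-be-indexed flag."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, indexable in pages.items():
        if not indexable:
            continue  # Pages marked noindex are left out of the sitemap.
        loc = ET.SubElement(ET.SubElement(urlset, "url"), "loc")
        loc.text = url
    return ET.tostring(urlset, encoding="unicode")


if __name__ == "__main__":
    print(build_sitemap({
        "https://example.com/": True,
        "https://example.com/admin/": False,
    }))
```

Keeping the indexable flag next to each URL makes it easier to keep the sitemap and the noindex directives in agreement.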

Maintaining Website Structure During Exclusions

Maintaining a clear website structure is essential. Excluding pages should not confuse users or search engines.

- Keep a logical hierarchy.

- Use clear navigation menus.

- Regularly update your sitemap.

For better organization, consider using a table:

| Page Type | Indexing Status | Reason for Exclusion |

|---|---|---|

| Privacy Policy | Noindex | Not relevant for search |

| Admin Pages | Noindex | Internal use only |

| Test Pages | Noindex | Not finalized |

This structure helps both visitors and search engines. Ensure all pages serve a purpose. Avoid cluttering your site with unnecessary pages.

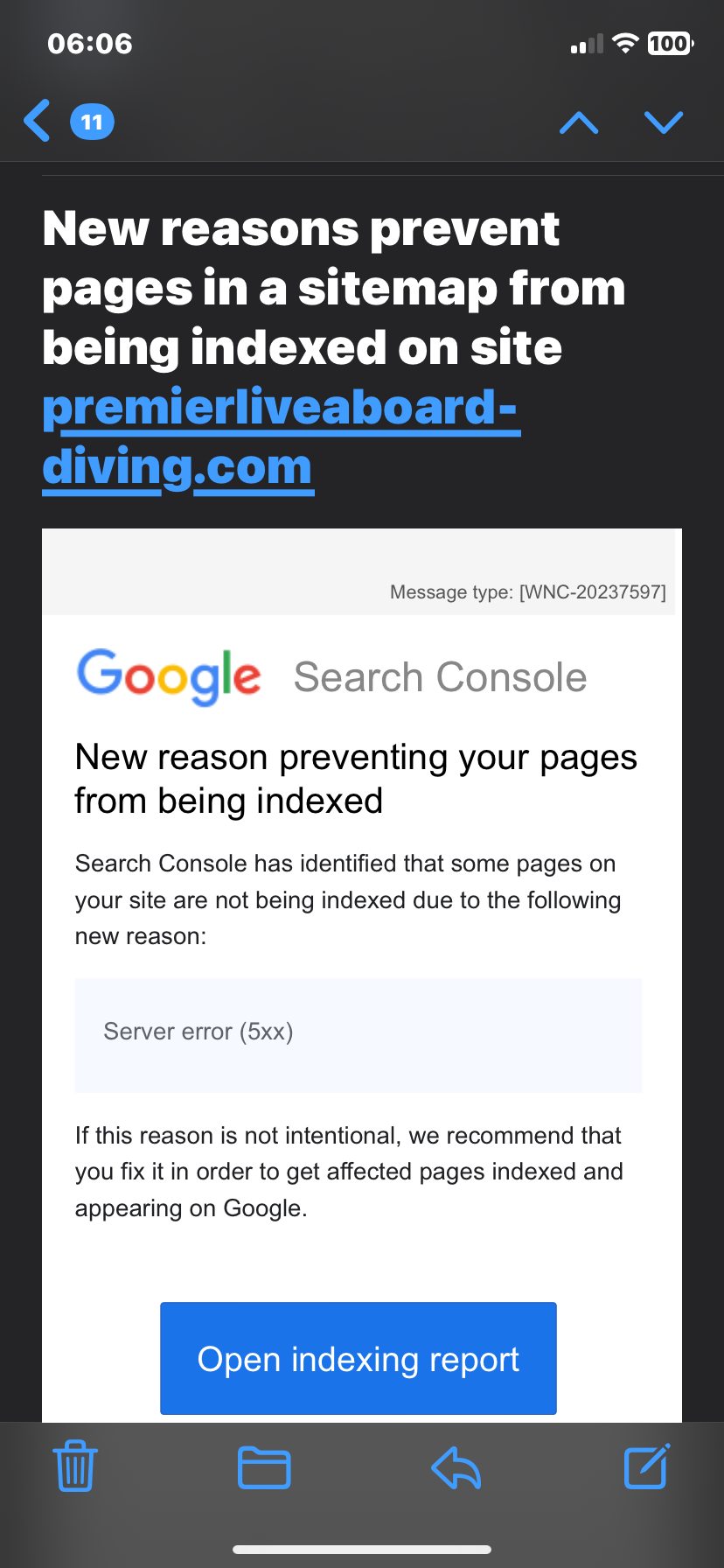

Monitoring And Testing Your Exclusion Settings

Keeping track of your exclusion settings is vital. It ensures Google does not index unwanted pages. Regular monitoring helps catch any issues early. Testing these settings gives you peace of mind.

Tools For Indexing Status Checks

Use these tools to monitor your site’s indexing status:

- Google Search Console: This tool shows indexed pages.

- Bing Webmaster Tools: Offers insights on Bing indexing.

- Screaming Frog SEO Spider: Crawls your site for issues.

- Sitebulb: Identifies indexing problems with a detailed report.

Troubleshooting Indexing Issues

If you notice unwanted pages indexed, take action quickly. Here are steps to troubleshoot:

- Check Robots.txt: Ensure the correct pages are disallowed.

- Inspect Meta Tags: Verify that `<meta name="robots" content="noindex">` is present.

- Use URL Removal Tool: Remove specific URLs in Google Search Console.

- Review Sitemap: Ensure it only includes pages you want indexed.
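Some of these checks can be partly automated. The sketch below takes a page's robots.txt text, HTML, and response headers (fetched however you prefer) and reports two common problems: a missing noindex directive, and a noindex that Google cannot read because robots.txt blocks the URL. The function name `diagnose` and its inputs are hypothetical, and the header lookup assumes the key is spelled exactly `X-Robots-Tag`.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class _RobotsMeta(HTMLParser):
    """Sets noindex to True if a robots meta tag contains noindex."""

    def __init__(self):
        super().__init__()
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        attr = {key: (value or "").lower() for key, value in attrs}
        if tag == "meta" and attr.get("name") == "robots":
            self.noindex = self.noindex or "noindex" in attr.get("content", "")


def diagnose(url: str, robots_txt: str, html: str, headers: dict) -> list:
    """Return findings for one URL that is supposed to stay unindexed."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    crawlable = rules.can_fetch("Googlebot", url)

    meta = _RobotsMeta()
    meta.feed(html)
    # Assumes the header key is spelled exactly "X-Robots-Tag".
    header_noindex = "noindex" in headers.get("X-Robots-Tag", "").lower()
    has_noindex = meta.noindex or header_noindex

    findings = []
    if not has_noindex:
        findings.append("no noindex directive found in meta tag or X-Robots-Tag header")
    if has_noindex and not crawlable:
        findings.append("robots.txt blocks crawling, so the noindex directive cannot be read")
    return findings
```

An empty list means neither problem was detected. It does not confirm that Google has already dropped the page, which is still best checked in Search Console.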

Regular checks prevent unwanted indexing. Keeping your site clean is essential for SEO.


Best Practices And Common Pitfalls

Preventing Google from indexing certain pages is crucial for privacy. Use the right methods to ensure sensitive information stays hidden. Follow best practices and avoid common mistakes for effective results.

Balancing Accessibility And Privacy

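Finding the right balance is key. You want private pages to stay reachable for the right users but out of search results, while important public pages remain indexed. Here are some strategies:

- Use Robots.txt: Keep crawlers out of specific pages or directories.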

- Noindex Tag: Add this tag to pages you want hidden.

- Secure Directories: Use passwords for sensitive areas.

Remember, too much blocking can limit your site’s visibility. Ensure essential pages remain accessible to users. Evaluate what needs protection versus what can be public.

Regularly Updating Your Exclusion Protocols

Websites change often. Regular updates keep your exclusion protocols effective. Follow these steps:

- Review your Robots.txt file monthly.

- Check Noindex tags on important pages.

- Audit your site’s content for sensitive information.

Common pitfalls include:

| Common Pitfalls | Consequences |

|---|---|

| Not updating protocols | Old pages may still be indexed |

| Over-blocking | Missing traffic on key pages |

| Ignoring user feedback | Potential loss of audience engagement |

Stay proactive. Regular updates can help maintain the right balance.

Frequently Asked Questions

How Can I Block Google From Indexing A Page?

You can block Google from indexing a page by using the “noindex” meta tag. Add this tag in the HTML header of the page you wish to exclude. Additionally, you can use the robots.txt file to restrict crawler access to other parts of the site.

Do not block a noindex page in robots.txt, because Google must be able to crawl the page to see the tag.

What Is A Robots.txt File?

A robots.txt file is a text file that instructs search engine crawlers which pages to avoid. It resides in your website’s root directory. By configuring this file, you can prevent crawling of certain pages, although a blocked URL may still be indexed if other pages link to it. Be cautious, as misconfigurations can lead to unintended blocking.

Does Noindex Affect SEO Rankings?

Yes, using a “noindex” tag can impact your SEO rankings. It prevents search engines from displaying the page in search results, so that page can no longer rank or bring in search traffic. Consequently, any SEO value from that page won’t contribute to your overall ranking. Use it wisely to maintain your site’s credibility and focus on valuable content.

Can I Hide Pages From Google Without Coding?

Yes, you can hide pages from Google without coding. Use content management systems (CMS) that offer built-in options. Many platforms provide settings to mark a page as “private” or “noindex.” This makes it easier to manage your site’s visibility without technical knowledge.

Conclusion

Preventing Google from indexing certain pages is essential for maintaining your website’s focus. Use the right methods, such as robots.txt files and meta tags, to control visibility. Regularly audit your site to ensure sensitive content remains hidden. This proactive approach helps improve your site’s overall SEO performance and user experience.