A crawl delay is a directive that controls how quickly search engine bots request pages from a website. It is configured in the robots.txt file.

Navigating the digital landscape requires understanding the delicate balance between accessibility and site performance. Search engines use bots to crawl websites, indexing new and updated content. Yet, unchecked bot traffic can strain server resources, leading to slow load times or even downtime.

That’s where configuring a crawl delay comes into play. This adjustment helps manage the frequency at which bots visit your site, ensuring a smoother operation without compromising the visibility and timely indexing of your content. Mastering this setting can significantly enhance user experience and improve site health, making it a critical aspect for webmasters and SEO strategists alike. It’s a strategic move to maintain optimal site functionality while staying prominent on search engine results pages.

Introduction To Crawl Delay

Imagine a world where digital librarians visit your website. They need time to understand your content. Crawl delay is that time. It’s a rule for these visitors, known as web crawlers. It tells them how long to wait between loading pages. This delay helps manage your website’s load. Let’s explore this further.

The Role Of Web Crawlers

Web crawlers, also known as spiders or bots, scan websites. They index information for search engines. A crawl delay sets the pace for their visits. Proper crawler management is key. It ensures your content shines in search results.

Impact On Website Performance

A website’s speed is crucial. Too many crawler requests can slow it down. This affects user experience. Setting an optimal crawl delay is important. It balances performance and visibility. A table can help illustrate this balance:

| Crawler Visits | Performance | User Experience |
|---|---|---|
| Too Frequent | May Slow Down | Can Deter Users |
| Optimized Frequency | Stable | Enhanced |
| Too Infrequent | Underindexed | Less Discoverable |


Crawl Delay Fundamentals

Let’s dive into the Crawl Delay Fundamentals. This concept is vital for website owners. It helps manage how search engines visit your site. Understanding it can improve your site’s health and SEO.

Defining Crawl Delay

Crawl delay is a rule. It tells search engine bots how long to wait between page crawls. This delay is crucial for site performance. Sites specify this in the robots.txt file. A proper delay ensures your site runs smoothly for visitors.

How Crawl Delay Works

Crawl delay operates through the robots.txt file. Here’s a simple step-by-step guide:

- Locate your site’s `robots.txt` file.
- Add the `Crawl-delay` directive.
- Specify the delay in seconds.

For example:

User-agent: *
Crawl-delay: 10

This tells all bots to wait 10 seconds between requests. The goal is to balance crawl rate and site performance. Not all search engines honor this request. But many do, including Bing and Yandex.

| Search Engine | Respects Crawl-delay? |
|---|---|
| Bing | Yes |
| Yandex | Yes |
| Google | No |

Remember: Not all bots follow this rule. Google, for instance, ignores Crawl-delay and relies on its own algorithms to decide how fast to crawl a site. Still, setting a crawl delay is good practice. It can help with the search engines that do honor it.
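The directive shown above can also be read programmatically. Here is a minimal sketch using Python’s standard `urllib.robotparser` module. The `ROBOTS_TXT` string and the `read_crawl_delay` helper are illustrative names, not part of any search engine’s tooling.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt content matching the directive described in the text.
ROBOTS_TXT = """\
User-agent: *
Crawl-delay: 10
"""


def read_crawl_delay(robots_txt: str, user_agent: str):
    """Return the Crawl-delay in seconds that applies to user_agent, or None."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.crawl_delay(user_agent)


# The wildcard group applies to any bot that has no group of its own.
print(read_crawl_delay(ROBOTS_TXT, "bingbot"))  # prints 10
```

A well-behaved crawler would pause for that many seconds between requests. A bot that ignores the directive never performs this lookup at all.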

The Importance Of Crawl Delay

Crawl delay is a rule for search engines. It tells a bot how long to wait between requests to your site. This delay protects your site from overload. It ensures bots don’t slow down your website. Let’s explore why setting a crawl delay matters for site owners.

Benefits For Website Owners

Setting the right crawl delay brings several benefits:

- Controls server load and prevents crashes.

- Manages bot traffic to keep site performance high.

- Helps small server resources last longer.

Improving User Experience

User experience improves with crawl delay. Visitors enjoy faster loading pages. They face fewer errors. A good experience keeps them coming back.


Assessing Your Need For Crawl Delay

Search engines use web crawlers to index content. Sometimes, frequent crawling can strain server resources. Crawl delay settings help manage this. Let’s see if your site needs crawl delay.

Analyzing Website Traffic

Begin by checking your site’s traffic. Use analytics tools for this task. Look for patterns in visitor spikes. High traffic might need crawl delay adjustments.

- Peak times: Note the busiest hours.

- Visitor numbers: Count daily and monthly users.

- Page views: Tally views for insight on content indexing.
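As a rough illustration of the peak-time check, the sketch below counts requests per hour of the day. It assumes you have already exported request timestamps as ISO 8601 strings. The `busiest_hours` helper and the sample data are hypothetical.

```python
from collections import Counter
from datetime import datetime


def busiest_hours(timestamps, top_n=3):
    """Return the top_n (hour, request_count) pairs, busiest first."""
    counts = Counter(datetime.fromisoformat(ts).hour for ts in timestamps)
    return counts.most_common(top_n)


# Hypothetical sample of request timestamps taken from an analytics export.
sample = [
    "2024-05-01T09:15:00",
    "2024-05-01T09:40:00",
    "2024-05-01T09:59:00",
    "2024-05-01T14:05:00",
    "2024-05-01T14:30:00",
    "2024-05-01T22:10:00",
]

print(busiest_hours(sample, top_n=2))  # prints [(9, 3), (14, 2)]
```

Hours that consistently top this list are the ones where extra bot traffic is most likely to hurt.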

Server Load Considerations

Next, assess server performance. High load times indicate stress. Crawl delay can ease this.

| Server Statistic | Consideration |
|---|---|
| Uptime | Strive for 99.9% or better. |
| Response Time | Keep under 200 ms. |
| Load Time | Aim for 1-3 seconds. |

Slow server responses suggest a need for crawl delay. Adjust settings to balance traffic and crawling.

Setting Up Crawl Delay

Understanding how to set up a crawl delay is crucial. It helps manage how often search engine bots visit your site. This can prevent your site from being overwhelmed. Let’s dive into the steps to set up a crawl delay.

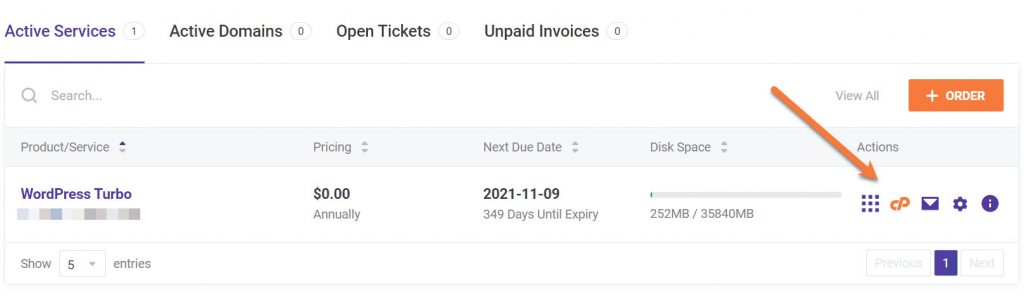

Editing The Robots.txt File

To set up a crawl delay, start with your robots.txt file. This file talks to web crawlers. It tells them what they can and cannot do on your site.

- Find your site’s robots.txt file. It’s usually in the root directory.

- Edit the file by adding `User-agent: *`. This means all bots. Then add `Crawl-delay: 10`. The number is the delay in seconds.

- Save your changes. The directive tells bots to wait 10 seconds between page requests.

Note: Not all search engines obey crawl delay. Googlebot uses its own settings.

Best Practices For Configuration

Setting the right crawl delay is key. A delay that is too short can let bots overwhelm your site. A delay that is too long might slow indexing and hurt your SEO.

- Test different delays to find what works best for your site.

- Use webmaster tools, such as Google Search Console or Bing Webmaster Tools, to monitor crawl rate and site performance.

- Update your robots.txt file if you change your site’s content often.

Remember, the goal is to balance bot traffic and site performance.


Crawl Delay And SEO

Crawl Delay and SEO are critical for website performance. Search engines use bots to crawl sites. This process updates their index. A crawl delay tells bots how long to wait before loading a new page. This waiting time affects how often bots visit your site. Let’s explore its impact on SEO.

Impact On Search Engine Rankings

Crawl delay can influence rankings. It’s a balance. Too long a delay might mean less frequent updates in search results. A shorter delay could overwhelm your server. This could lead to site downtime. Both scenarios can harm your search engine visibility.

Balancing Crawl Rate And Indexing

Setting the right crawl delay is vital. A good balance ensures steady indexing without server overload. Use the robots.txt file to set this delay. Find the sweet spot. It keeps your content fresh for search engines. It also maintains site performance.

Here is an example of setting a crawl delay:

User-agent: *
Crawl-delay: 10

This code in robots.txt sets a 10-second delay for all bots.
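To see why a long delay can slow indexing, it helps to work out the ceiling it places on crawling. The sketch below assumes a single bot that sends exactly one request per delay interval and ignores fetch time. Real crawlers may interpret the directive differently, so treat the numbers as an upper bound. The function names and the 100,000-page site are illustrative.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def max_pages_per_day(crawl_delay_seconds: float) -> int:
    """Upper bound on pages one bot can fetch per day at the given delay."""
    if crawl_delay_seconds <= 0:
        raise ValueError("crawl delay must be positive")
    return int(SECONDS_PER_DAY // crawl_delay_seconds)


def days_to_crawl(total_pages: int, crawl_delay_seconds: float) -> float:
    """Minimum number of days one bot needs to fetch every page once."""
    return total_pages / max_pages_per_day(crawl_delay_seconds)


print(max_pages_per_day(10))                 # prints 8640
print(round(days_to_crawl(100_000, 10), 1))  # prints 11.6
```

Under these assumptions, a 10-second delay caps a bot at 8,640 pages per day, so a hypothetical 100,000-page site would take more than 11 days to crawl once.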

Monitoring The Effects Of Crawl Delay

Monitoring the Effects of Crawl Delay is crucial for website owners. It helps maintain site health. A crawl delay setting controls how often search engine bots visit your site. You want to balance between good indexing and server overload. After setting a crawl delay, you must watch how it affects your site.

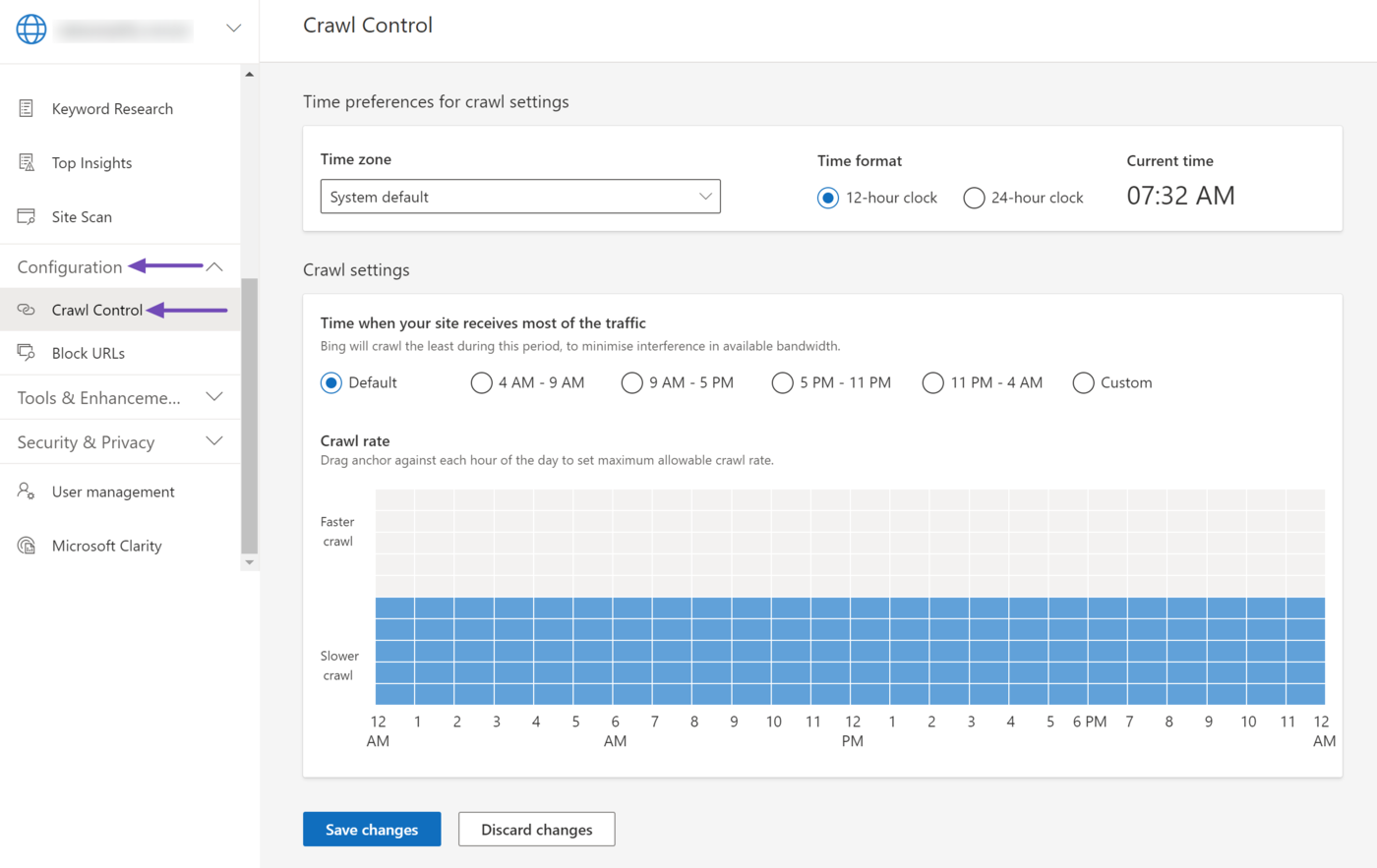

Tools For Tracking Crawler Behavior

Several tools can show you how bots interact with your site:

- Google Search Console: Offers reports on Googlebot’s activity.

- Bing Webmaster Tools: Provides data on Bingbot crawl rates.

- Server Logs: They give detailed access records.

- Bot Tracking Software: Specialized tools for monitoring various bots.

Use these tools to see if bots respect the crawl delay. Check for any unusual activity. Look for patterns that might suggest problems.
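One way to check whether bots respect the delay is to measure the gaps between their consecutive requests in your server logs. This is a minimal sketch that assumes the log has already been parsed into `(user_agent, unix_timestamp)` pairs. The helper name and the sample entries are hypothetical.

```python
from collections import defaultdict


def count_delay_violations(requests, crawl_delay):
    """Count, per user agent, the request gaps shorter than crawl_delay seconds."""
    by_agent = defaultdict(list)
    for agent, timestamp in requests:
        by_agent[agent].append(timestamp)

    violations = {}
    for agent, times in by_agent.items():
        times.sort()
        # Compare each request with the one immediately before it.
        gaps = (later - earlier for earlier, later in zip(times, times[1:]))
        violations[agent] = sum(1 for gap in gaps if gap < crawl_delay)
    return violations


# Hypothetical parsed log entries: (user agent, seconds since some start time).
log = [
    ("bingbot", 0), ("bingbot", 10), ("bingbot", 25),
    ("fastbot", 0), ("fastbot", 2), ("fastbot", 3),
]

print(count_delay_violations(log, crawl_delay=10))
# prints {'bingbot': 0, 'fastbot': 2}
```

A bot with a consistently high violation count is probably ignoring the directive and may need to be handled another way, such as through that search engine’s webmaster tools.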

Evaluating Site Performance Post-setup

After setting the crawl delay, observe your site’s performance.

- Check page loading times.

- Review any changes in search engine rankings.

- Monitor user experience metrics.

Compare the data from before and after the crawl delay setup. See if there are improvements. Page loads that stay slow could mean your delay is too low. Ranking drops or stale search listings could mean it is too high.

Adjust the crawl delay if needed. This ensures your site stays fast and ranks well.

Troubleshooting Common Crawl Delay Issues

Troubleshooting Common Crawl Delay Issues can be critical for website health. Crawl delay rules help manage how search engines access your site. But, sometimes things go wrong. Let’s tackle common problems and fixes.

Dealing With Over-crawling

Over-crawling happens when search engines visit too often. This can slow down your website. Your server might even crash. To prevent this:

- Adjust the crawl rate in your search engine’s webmaster tools.

- Update your `robots.txt` file with specific crawl-delay instructions.

- Monitor server logs to identify any spikes in crawl activity.

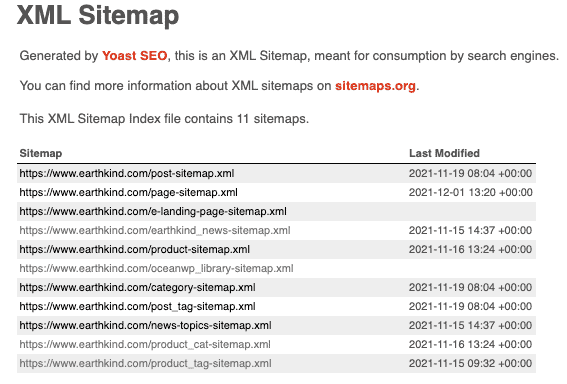

Resolving Under-crawling Problems

Under-crawling means search engines don’t visit often enough. Your content stays unindexed. To fix this:

- Check `robots.txt` for any unintended blockages.

- Submit a sitemap to search engines to highlight content.

- Ensure your website has a good internal linking structure.
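The check for unintended blockages can be sketched with Python’s standard `urllib.robotparser`. The robots.txt content, the paths, and the `blocked_paths` helper below are made up for illustration. Substitute your own file and the URLs you expect to be indexed.

```python
from urllib.robotparser import RobotFileParser


def blocked_paths(robots_txt: str, user_agent: str, paths):
    """Return the paths that robots_txt forbids user_agent from fetching."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [path for path in paths if not parser.can_fetch(user_agent, path)]


# Hypothetical robots.txt that accidentally blocks the whole blog.
robots_txt = """\
User-agent: *
Disallow: /blog/
"""

important_pages = ["/", "/blog/post-1", "/products/"]
print(blocked_paths(robots_txt, "bingbot", important_pages))
# prints ['/blog/post-1']
```

Any important page that shows up in the result is being withheld from crawlers by a `Disallow` rule, regardless of the crawl delay.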

Advanced Crawl Delay Tactics

Advanced Crawl Delay Tactics help manage how search bots access your site. This balance ensures your site remains fast for users while bots index content.

Dynamic Crawl Delay Strategies

Dynamic crawl delay adjusts based on site traffic. It prevents server overload during peak times. Use server logs to track busy periods. Adjust crawl rates in your robots.txt file accordingly.

Crawl Delay For Large-scale Websites

Large websites face unique challenges. A static crawl delay won’t suffice. Implement dynamic crawl delay. This tailors bot access to current server load and visitor traffic.

Consider these steps:

- Monitor server performance: Use tools to watch load times.

- Analyze peak hours: Identify when your site gets the most visits.

- Customize robots.txt: Use ‘Crawl-delay’ directive for bot timing.

For e-commerce sites, set shorter delays off-peak. For content-heavy sites, increase the delay when updating many pages.
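A dynamic strategy along these lines could be sketched as follows. The peak hours, the two delay values, and both helper functions are assumptions chosen for illustration. Keep in mind that crawlers cache robots.txt, so a file that changes every hour may not be re-read promptly.

```python
def choose_crawl_delay(hour, peak_hours, peak_delay=20, off_peak_delay=5):
    """Pick a longer delay during peak hours and a shorter one otherwise."""
    return peak_delay if hour in peak_hours else off_peak_delay


def render_robots_txt(delay):
    """Build robots.txt content that applies the delay to all bots."""
    return f"User-agent: *\nCrawl-delay: {delay}\n"


# Hypothetical peak window identified from server logs: noon to 3 pm.
PEAK_HOURS = {12, 13, 14}

print(render_robots_txt(choose_crawl_delay(14, PEAK_HOURS)))
print(render_robots_txt(choose_crawl_delay(3, PEAK_HOURS)))
```

A scheduled job could write the rendered text to the site’s robots.txt file. Because of caching, coarse windows such as day and night are likely to be more dependable than hour-by-hour changes.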

Remember:

- Not all search engines honor ‘Crawl-delay’.

- Changes in robots.txt need time to take effect.

- Regularly review crawl stats in webmaster tools.

Crawl Delay’s Future

As search engines evolve, so does the way they interact with websites. The crawl delay setting is a directive that webmasters can use to control how often a search engine’s bot visits their site. It’s a way to manage the load on a server caused by the crawling process. Looking into the future, crawl delay will continue to adapt alongside the ever-changing landscape of search engine algorithms.

Evolving Algorithms And Crawl Rates

Search engines constantly refine their algorithms to index content more efficiently. With these changes, the importance of setting an optimal crawl rate becomes more evident. A well-configured crawl delay ensures that your site remains accessible and performs well, both for users and for bots.

- Bots become smarter: They gauge server health and adjust accordingly.

- Site updates matter: Frequent updates might call for more frequent crawls.

- Balance is key: Too much or too little crawling can affect site performance.

Staying Ahead With Adaptive Techniques

To maintain a good relationship with search engines, adaptive crawl delay tactics are necessary. These tactics allow for flexibility in how search engine bots interact with your site, taking into account traffic patterns and server load.

- Monitor server logs: Understand bot behavior and adjust settings as needed.

- Use webmaster tools: Set preferred crawl rates through these platforms where they offer that control.

- Automate when possible: Some systems can adjust crawl rates dynamically.

By staying proactive with crawl delay management, your site can maintain optimal performance and visibility in search engine results pages (SERPs). This careful balance helps in fostering a positive user experience and improving SEO rankings.

Frequently Asked Questions

What Is The Purpose Of Crawl Delay?

Crawl delay is a directive used in `robots.txt` that instructs search engine bots how many seconds to wait between successive crawling actions. It helps manage server load and prevent site performance issues.

How Does Crawl Delay Impact SEO?

Setting an appropriate crawl delay can prevent search engines from overloading your server, which might otherwise lead to site downtime. However, setting it too high could slow down the indexing of your content, potentially affecting SEO.

Can Crawl Delay Be Set For Specific Bots?

Yes, crawl delay can be customized for different bots within the `robots.txt` file. You can specify different wait times for each user-agent, allowing you to tailor bot traffic according to server capacity.
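As a sketch of per-bot configuration, the code below renders one group per user-agent and then reads the result back with Python’s standard `urllib.robotparser` to confirm which delay each bot would see. The delay values and the `render_per_bot_rules` helper are illustrative, not recommendations.

```python
from urllib.robotparser import RobotFileParser


def render_per_bot_rules(delays):
    """Render one robots.txt group per user agent, separated by blank lines."""
    groups = [
        f"User-agent: {agent}\nCrawl-delay: {seconds}\n"
        for agent, seconds in delays.items()
    ]
    return "\n".join(groups)


# Hypothetical delays in seconds; "*" is the fallback for all other bots.
robots_txt = render_per_bot_rules({"bingbot": 5, "Yandex": 10, "*": 20})
print(robots_txt)

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())
print(parser.crawl_delay("bingbot"))       # prints 5
print(parser.crawl_delay("SomeOtherBot"))  # prints 20
```

Bots with a named group get their own delay, and everything else falls back to the `*` group.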

Where Do I Configure Crawl Delay?

Crawl delay is configured in the `robots.txt` file of your website. This file is located in the root directory and provides instructions to web crawling bots.

Conclusion

Understanding crawl delay settings can significantly improve your website’s relationship with search engines. By fine-tuning this parameter, you ensure bots navigate your site efficiently without overwhelming your server. Remember, a balanced crawl delay optimizes indexing while keeping site performance intact.

Take the leap and configure your crawl delay to enhance your SEO strategy today!